Key messages

• Combining two types of impacts frameworks – societal goals and descriptors of change – allowed us to understand how the research projects contributed to broad societal goals, not just whether they addressed those goals.

• The UN Sustainable Development Goals generally captured the goals of the project participants’ research well, but participants were also interested in developing goals specific to their institute that incorporated input from the societal partners involved in their research.

• Despite practising various forms of engaged scholarship, project participants were new to the concept of societal impact, and at times found it difficult to describe and provide evidence of impact; additional professional development is therefore needed before the organisation adopts societal impacts evaluation.

Introduction and background

As our communities and societies face existential challenges related to climate change, racism, disease and human well-being, among other stressors, universities, researchers and funders are increasingly asking how research can more effectively advance positive changes in society and the environment that address these challenges. The ways in which research contributes to beneficial social and environmental changes are broadly defined as the societal impacts of research. (We use the term ‘social’ to indicate problems or impacts related primarily to people, and ‘societal’ as a broader term that encompasses humans, the physical environment, and the linkages between the two.) However, too often, academic research remains divorced from its applications to tangible problems. Over the past several decades, calls to scientists to engage with society to address global challenges have increased (see, for example, Association of Public and Land-Grant Universities, 2019; Beyond the Academy, 2022; Brink, 2018; Lubchenco and Rapley, 2020). The persistence of these calls for engagement indicates that we have not yet closed the gap between academic research and its use in society.

Reasons the gap persists include: the now-outdated perception that good science should be separated from social influence (Cvitanovic et al., 2019); misalignment of academic reward structures, so that engagement and impact are undervalued (Alvesson et al., 2017; Association of Public and Land-Grant Universities, 2019; Ozer et al., 2023); and failures of academic training to impart the skills necessary to generate, describe and demonstrate research impacts (Bayley and Phipps, 2019a). To support researchers’ and institutions’ efforts to conduct more societally impactful research, we sought to develop and test a research impacts evaluation framework that promotes reflection and learning, and that strengthens engagement and impact. We argue that, by integrating this type of framework into standard academic practice, the academic community can serve society more effectively and genuinely.

The practice of societally engaged scholarship is relatively new in many academic disciplines (although it is well-established in several). As the research community expands into this space, a system that encourages reflection and learning is crucial to improving our collective ability to produce usable knowledge and mobilise that knowledge into action. The types of impacts documented, and the process by which they are measured, point to an organisation’s goals and priorities. By making research impacts evaluation more accessible to research organisations, we hope that it becomes an effective tool to solidify the place of engaged scholarship and impacts-focused research in mainstream academia.

This article reports on a pilot project to design and test a research impacts framework that provides insights to researchers about their work, and offers organisational leaders an overview of their organisation’s impacts. We designed this framework by drawing on existing research impacts frameworks (including, but not limited to, Fazey et al., 2014; Gunn and Mintrom, 2017; Meagher et al., 2008; Smit and Hessels, 2021) and knowledge-use theories (Edwards and Meagher, 2020; Pelz, 1978; Weiss, 1973), and tested it through a survey-based tool with investigators from 12 projects in one interdisciplinary research institute at a large US university. The work that occurs within this institute aims to be use-inspired, engaged and impactful among the communities within which the research is done. Yet, like many such research institutes, it does not have a cohesive approach for documenting or assessing the impacts of its work on the immediate community and society at large. The pilot test revealed a wealth of societal impacts emerging from the 12 projects, indicating that the impacts framework is effective in eliciting examples and evidence of impact. However, the pilot test also revealed weaknesses in our initial survey-based tool, which was less effective than anticipated, particularly with researchers new to impacts assessment.

Growing interest in research impacts

Interest in the societal impacts of research has grown rapidly over the last two decades. This growth is driven by external forces, such as national funding agencies that have created frameworks like the UK’s Research Excellence Framework (https://www.ref.ac.uk/) and the US National Science Foundation’s broader impacts categories (https://new.nsf.gov/funding/learn/broader-impacts), and smaller funding agencies seeking evidence of the changes made by the projects they support (Arnott et al., 2020; Hudson et al., 2023). Growth is also driven by internal institutional commitments, such as land-grant missions in the US, calls for a commitment to public service (Association of Public and Land-Grant Universities, 2019), or commitments to achieving the United Nations Sustainable Development Goals (SDGs) (for example, the University of Minnesota, https://sdg.umn.edu/). Realigning research goals in ways that emphasise community engagement and public service can help researchers move away from extractive modes of research that harm minoritised and underserved communities (Britton and Johnson, 2023). Researchers who undertake engaged scholarship are also seeking ways to demonstrate and communicate the impact of their work beyond the purely academic realm (Andrews, 2022; Drame et al., 2011; Foster, 2010). Finally, there is interest in using societal impact evaluation as a learning and research tool to strengthen the field of engaged scholarship by shedding light on the pathways from research to impact (Penfield et al., 2014).

Examples of impacts evaluation frameworks in use or being tested have been documented in several comprehensive reviews (Greenhalgh et al., 2016; Louder et al., 2021; Pedersen et al., 2020; Penfield et al., 2014; Smit and Hessels, 2021). Below, we discuss elements of these frameworks that we used to construct our societal impacts evaluation framework for use in an environmental research institute.

Evaluation frameworks that support these research impact goals can be broadly categorised into two main types: assessment-driven and mission-driven (Bayley and Phipps, 2019a). Assessment-driven frameworks focus on evaluation for accountability and transparency, and are often implemented when public resources support research activities (Muhonen et al., 2020; Pedersen et al., 2020; Penfield et al., 2014). In addition to holding research organisations accountable for past funding, these evaluations help funders make informed choices about future funding (Penfield et al., 2014). Assessment-driven frameworks result in data summarising what and how many impacts occurred in connection to specific research efforts.

Mission-driven frameworks aim to ensure that research supports genuine societal engagement, and they encompass benefits beyond traditional economic measures (Muhonen et al., 2020). These evaluations encourage meaningful connections between researchers and society (Spaapen et al., 2011); help researchers address global challenges (Jensen et al., 2021); and embed an ethos of service into the research enterprise (Edwards and Meagher, 2020; Rickards et al., 2020). Mission-driven frameworks place more emphasis on how and why a research effort contributes to societal change through engagement, providing usable knowledge or developing trust between the research enterprise and broader society.

Challenges of impact evaluation

Despite increasing demands for research impact evaluation, such work is not without challenges. One concern involves perceptions that removing boundaries between science and society will dilute the integrity of research (Cvitanovic et al., 2019), or that emphasising societal impacts will devalue and divert resources from fundamental research (Chubb and Reed, 2018; Stern, 2016; Watermeyer and Chubb, 2019). Others are apprehensive about evaluation threatening their academic freedom by allowing external influence over research agendas (Watermeyer and Chubb, 2019). However, funding agencies have always had significant influence on research agendas, and societal values and interests have always influenced which research is valued, funded and undertaken (Greenwood and Levin, 2007; Jasanoff and Wynne, 1998).

Another challenge is that impact does not have a common definition or foundation that is accepted across different institutions, countries and research disciplines (De Jong et al., 2020; Jagannathan et al., 2020; Pedersen et al., 2020; Spaapen and Van Drooge, 2011). This is particularly noticeable when comparing impact definitions in science, technology, engineering and mathematics (STEM) fields with those in the social sciences and humanities (SSH). STEM impacts can be more easily quantified, often in economic terms, whereas identifying SSH impacts requires a more nuanced understanding of knowledge sharing and decision sciences (De Jong et al., 2020; Modern Language Association of America, 2022; Muhonen et al., 2020). Societal impacts via arts and culture are not well developed in existing frameworks; however, as examples captured through the Research Excellence Framework illustrate, the fine arts can have significant positive impacts.

The process of moving knowledge into use is rarely straightforward, and it poses additional challenges for evaluating research impacts. Significant time lags can occur between a project’s end and the adoption of new practices or other types of change (Adam et al., 2018; Penfield et al., 2014; Spaapen and Van Drooge, 2011). It takes time to translate research results into usable formats, and for organisations to be ready to integrate new knowledge into practice (Greenhalgh et al., 2016; Oliver et al., 2014; Pedersen et al., 2020). These challenges make it difficult to attribute change directly to a research finding or project (Adam et al., 2018; Penfield et al., 2014; Spaapen and Van Drooge, 2011). Research is only one of several factors that create societal change. Therefore, research evaluation often relies on contribution analysis to gauge influence on practitioner decisions or societal changes (Morton, 2015). A contribution framework acknowledges how multiple factors interact to create measurable change, while also identifying the ways that research efforts support that process.

The complex nature of research impacts also adds methodological challenges for data collection and evaluation. Sometimes collecting tangible pieces of evidence is simple, such as bibliometric analyses of research outputs used in policy documents (for example, Beard et al., 2023; Bornmann et al., 2016). However, less tangible impacts, such as identifying changes in understanding or attitude, require qualitative methods that allow project participants to reflect on their experiences. Unanticipated or emergent impacts are also more likely to be identified through open-ended qualitative inquiry (Meagher and Martin, 2017; Spaapen and Van Drooge, 2011). Impact evaluation should also incorporate experiences of societal partners who were involved in, or benefited from, the research (Adam et al., 2018; Gunn and Mintrom, 2017). Partners can confirm that impacts occurred, add richness about the impacts they experienced, and describe new impacts not previously documented by researchers. Gathering this feedback requires additional analysis, analytical skills, time and resources (Adam et al., 2018).

Finally, if research impact evaluation is implemented without adequate capacity, time or skills, it risks negative effects for researchers, their societal partners and their institutions. If impact evaluation becomes a ‘box-ticking’ exercise, rather than being part of a genuine commitment to engagement with society, societal partners can be harmed by ineffective engagement.

We sought to build on successful elements of existing frameworks and mitigate the challenges of impacts evaluation by developing a framework that: emphasises learning, practice improvement and tangible changes for societal partners; employs categories of impacts that are robust across disciplines; uses narrative and qualitative descriptions to highlight the research context and the complex processes involved in moving research into use; and makes expectations for evidence clear, but not rigid, to allow for a variety of data collection approaches.

Aims and objectives

Our context

The environmental institute assessed in this pilot study is situated in a land-grant university in the US with a Carnegie Classification of R1 (doctoral universities – very high research activity). US universities do not have a federal mandate to report impacts. Despite the lack of mandate, there is a groundswell of interest in the concept of societal impacts among US researchers and funders (Advisory Committee for Environmental Research and Education, 2022; Tseng et al., 2022).

Project aims

We sought to develop and pilot test a societal impacts framework that would help researchers, academic departments and universities to understand the societal impacts they generate, and identify opportunities for expansion of impactful work. The framework could inform individual projects, support funding applications (through evidence of past impact and informing impact plans), guide programmatic decision making, and be incorporated into performance reviews and promotion and tenure processes. Our efforts built upon previous societal impacts evaluations of engaged environmental research projects (Mach et al., 2020; Meadow and Owen, 2021; Owen, 2020, 2021; VanderMolen et al., 2020; Wall et al., 2017) to expand adoption of evaluation practices into new programmes, disciplines and contexts.

We intended this pilot test to include three elements: (1) a societal impacts framework applicable to inter- and transdisciplinary environmental research efforts; (2) a framework that included both societal impact goals and descriptors of change; and (3) a user-friendly process to document impacts. To test the framework, we developed an impacts reporting tool in the form of an online survey using Qualtrics software.

Our framework addressed Elements 1 and 2 by combining components from assessment-driven and mission-driven frameworks. We framed the assessment-driven component as ‘societal impact goals’, and asked researchers to identify the United Nations Sustainable Development Goals (SDGs) to which their work contributed. Our intention was to make the connection between research and society more evident by asking researchers to identify the societal purpose of their work, using an established global framework (Willson, 2022). We chose the SDGs because they are becoming a lingua franca for describing research impacts (Chankseliani and McCowan, 2021; Hourneaux Junior, 2021; Sianes et al., 2022). The societal impact goals element of the framework helps researchers identify what their research has contributed to.

We framed the mission-driven component as ‘societal impact descriptors’, using an impact typology similar to those used by the UK Economic and Social Research Council and by a system to evaluate social science and humanities research in Europe (Edwards and Meagher, 2020; Meagher et al., 2008). The framework, which is grounded in theories of knowledge use and research impact (Pelz, 1978; Weiss, 1973), includes six impact categories: instrumental, conceptual, capacity building, connectivity, social and environmental impacts (see Appendix for definitions). This typology presents societal impacts as a range of tangible and intangible changes (conceptual, capacity building, connectivity and instrumental impacts) that interact with each other to contribute to larger-scale social and/or environmental changes. The impact descriptors element of the framework helps highlight how the research has contributed to society.

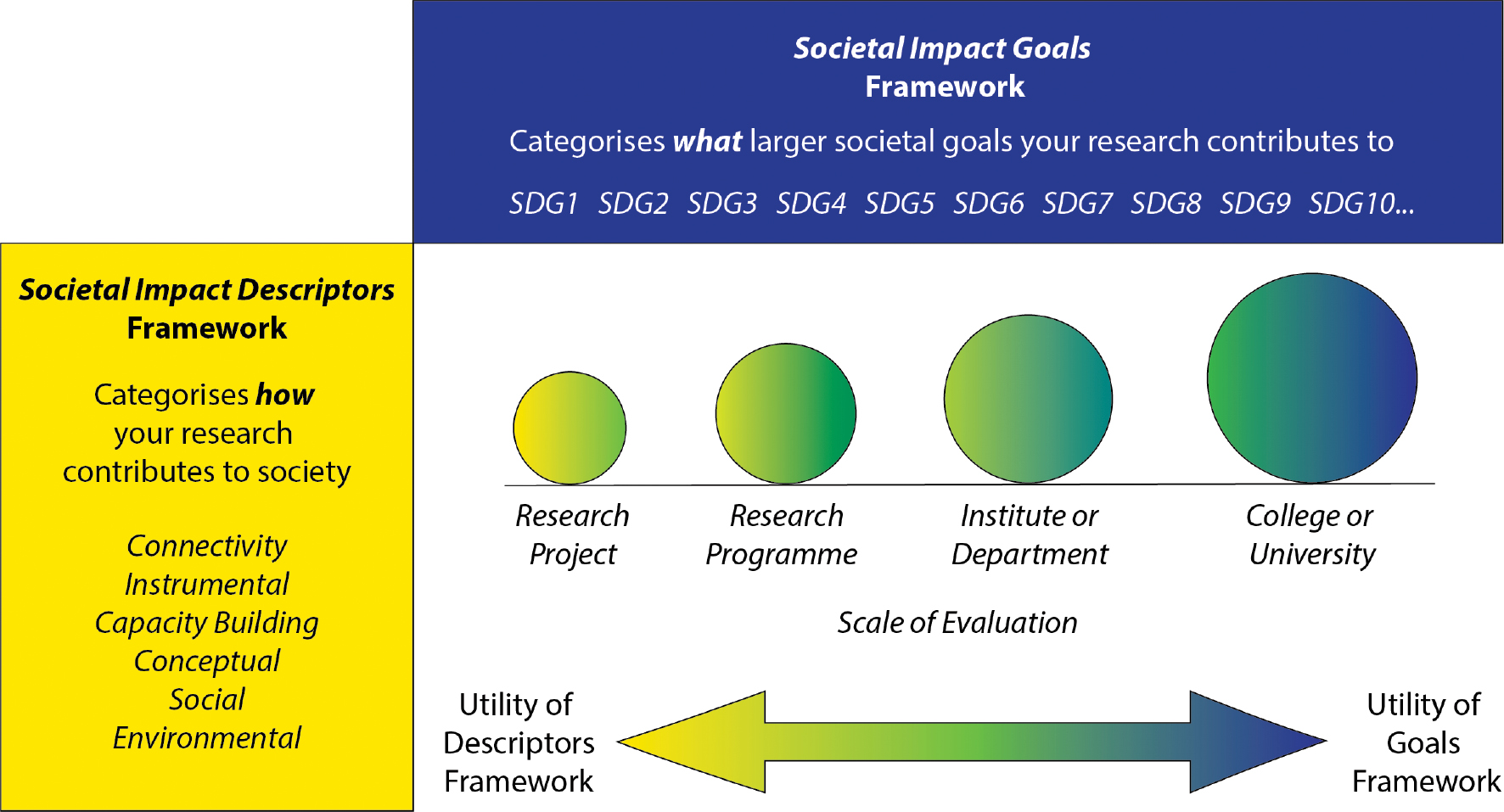

We suggest that each type of framework may have relatively more or less utility depending on the scale of analysis (see Figure 1). Impact descriptors may be more useful when describing how an individual project contributed to societal changes. While individual projects can contribute to broad societal goals, they are unlikely to move the needle on a societal scale, except under extraordinary circumstances. Conversely, institutions may derive benefit from aggregating individual projects into societal goal categories to demonstrate their broader impact, while a cumulative tally of ‘how’ impacts, such as contributions to changes in attitudes or understanding, may be less effective. When both impacts frameworks are combined, one reporting mechanism may be capable of fulfilling multiple institutional functions.

Methods

We designed the online reporting tool to include five sections that asked about the social or environmental problem(s) addressed by the project, how researchers engaged with societal partners, project activities and outputs, the impacts to which the research contributed (impact descriptors), and the project’s contributions to societal goals (SDGs). See Table 1 for a summary of the survey questions.

Table 1. Societal impacts survey questions

| Survey question or prompt |
|---|
| Investigators’ names and affiliations |
| Geographical focus of project |
| Project start and end dates |
| Who are your project partners or collaborators? |
| Project abstract |
| Research methods – brief summary |
| Research findings – brief summary |
| What social or environmental problem did you aim to address in your research? |
| Research outputs: Which of the following outputs did you produce through your research? Select all that apply. [list of possible research outputs, including an option for ‘other’] |
| Describe the outputs you produced through your research. Provide links or citations if available. |
| Societal impacts: What changed because of your research activities, your interactions with partners, your research findings, or your outputs? Select all that apply. [list of impact descriptor categories and definitions; see Appendix for details] |
| Describe what changed because of your research activities, your interactions with partners, your research findings or your outputs. In your description, include for whom things changed or who changed because of your research. |
| Do you think your project contributes to any of the Sustainable Development Goals? It may not be an exact fit, but select those with the best fit. Select all that apply. [list of UN SDGs] |
| Please briefly describe how and why your project contributed to these SDGs. |
| If you stated that you did not think your project fits into one of the Sustainable Development Goals, please suggest a societal impacts goal to which you think your project does contribute. |
| Please feel free to note any additional thoughts/impacts/questions that this survey may not have asked about, but that you think are important for your project. |

Participation in testing the impact evaluation framework was voluntary; no financial or other institutional requirements compelled participation. This made Element 3, a user-friendly reporting tool that would not burden participants, all the more important. We used a combination of closed- and open-ended questions to give the reporting tool a clear structure, while inviting richer descriptions of impact and allowing for non-linear and lengthy pathways to impact (Pedersen et al., 2020) (see Table 1). We also embedded explanations of new concepts, such as the impact descriptor categories, into the reporting tool, and organised it to walk participants step-by-step through project goals, activities, outcomes and impacts.

The reporting tool was open for four months in early 2022. To recruit participants, we spoke with institute researchers at staff meetings, and sent detailed emails that included an introduction to impacts evaluation and a copy of the reporting tool. We received 12 project submissions representing 8 of the 13 research programmes housed within the institute (62 per cent programme response rate). The institute does not maintain a census of individual research projects conducted under its auspices, so we were unable to determine the total number of projects within the institute.

We offered meetings with anyone who wanted more information or additional guidance on the reporting process. Representatives from 9 of the 12 projects requested to meet with us prior to submitting their responses (one representative reported on two different projects). Meetings consisted of reviewing the purpose of our pilot project, providing background on societal impacts and talking through questions in the tool. For 4 projects, this was enough information for representatives to complete their responses on their own. In 5 other cases, we drafted responses with project representatives during the meeting, then sent them a copy to update or refine as necessary. The remaining 3 project representatives completed the reporting tool on their own.

This study was classified as programme evaluation/quality improvement, and did not require oversight from the Institutional Review Board. However, we assured participants that their responses would be de-identified and remain anonymous in publications. All participants consented to having their projects identified in separate internal institutional documents.

Findings

Effectiveness of the impacts framework

Societal partners and engagement

Projects reported partnering with a wide range of government agencies, community organisations and industry representatives. The 12 projects listed 46 different societal partners, including community and non-profit organisations, federal government agencies and tribal government agencies. Most projects took place within the US; 2 were based in Native Nations, and 2 included international settings. Projects employed a range of engagement activities and impact pathways, including working with students to develop academic skills, citizen science, and co-production of project design, knowledge and outputs with partners.

Impact categories

All 12 projects reported impacts in two or more of the six impact descriptor categories. Some projects reported multiple examples within a single category. However, most projects did not report a comprehensive set of impacts. Therefore, here we present impacts by category per project, rather than the total number of examples reported per category. We attribute this inconsistency to respondents’ unfamiliarity with this kind of impacts reporting, and we discuss the implications in greater detail below.
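The choice to count categories per project, rather than total examples per category, can be made concrete with a short Python sketch. The project identifiers and coded examples below are hypothetical and are not data from the pilot; the sketch only illustrates how counting distinct projects keeps one project that reported many examples in a single category from inflating that category's tally.

```python
from collections import Counter, defaultdict

# Hypothetical coded examples: (project_id, impact_category).
# A project may report several examples in the same category.
examples = [
    ("P01", "instrumental"),
    ("P01", "instrumental"),
    ("P01", "conceptual"),
    ("P02", "conceptual"),
    ("P02", "connectivity"),
    ("P03", "instrumental"),
]

def projects_per_category(examples):
    """Count distinct projects reporting each category (the approach used here)."""
    projects = defaultdict(set)
    for project, category in examples:
        projects[category].add(project)
    return {category: len(ids) for category, ids in projects.items()}

def examples_per_category(examples):
    """Count every reported example (the alternative not used here)."""
    return dict(Counter(category for _, category in examples))

print(projects_per_category(examples))  # {'instrumental': 2, 'conceptual': 2, 'connectivity': 1}
print(examples_per_category(examples))  # {'instrumental': 3, 'conceptual': 2, 'connectivity': 1}
```

With incomplete reporting, the per-project count is less sensitive to how thoroughly each respondent listed examples.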

Instrumental, connectivity, conceptual and capacity-building impacts can occur relatively quickly during a project, so more impacts are often reported in these categories (Edwards and Meagher, 2020). Social and environmental impacts often take longer to emerge, because they depend on research uptake, policy and behaviour change, and social and environmental system response times. However, two social impacts and two environmental impacts emerged from the 12 projects.

Of the projects, 10 demonstrated instrumental impacts through use of their research findings in plans and policies; 11 projects provided examples of conceptual impacts or changes in their partners’ knowledge about, or awareness of, the research topic; 9 projects provided examples of connectivity impacts by demonstrating relationships, partnerships or networks that endured after their project ended; and 8 projects described capacity-building impacts, such as changes in skills, expertise or resources of project partners (see Appendix).

Emergent impact subcategory

An additional subcategory of impact emerged from the data, which we labelled ‘higher education impact’. Higher education impacts were changes that occurred within the function or culture of a higher education institution because of the project. Each instance of this subcategory co-occurred with one of the six established impact descriptor categories – such as capacity building in higher education. Examples included adoption of culturally appropriate curriculum materials for Indigenous students and a project’s contribution to an institution’s efforts to increase Indigenous faculty hires. This subcategory is somewhat analogous to the category ‘attitude impact’ used by Edwards and Meagher (2020), which they define as changes in researchers’ attitudes towards transdisciplinary research. In this case, the attitude change occurs at the institutional scale.

Contributions to the SDGs

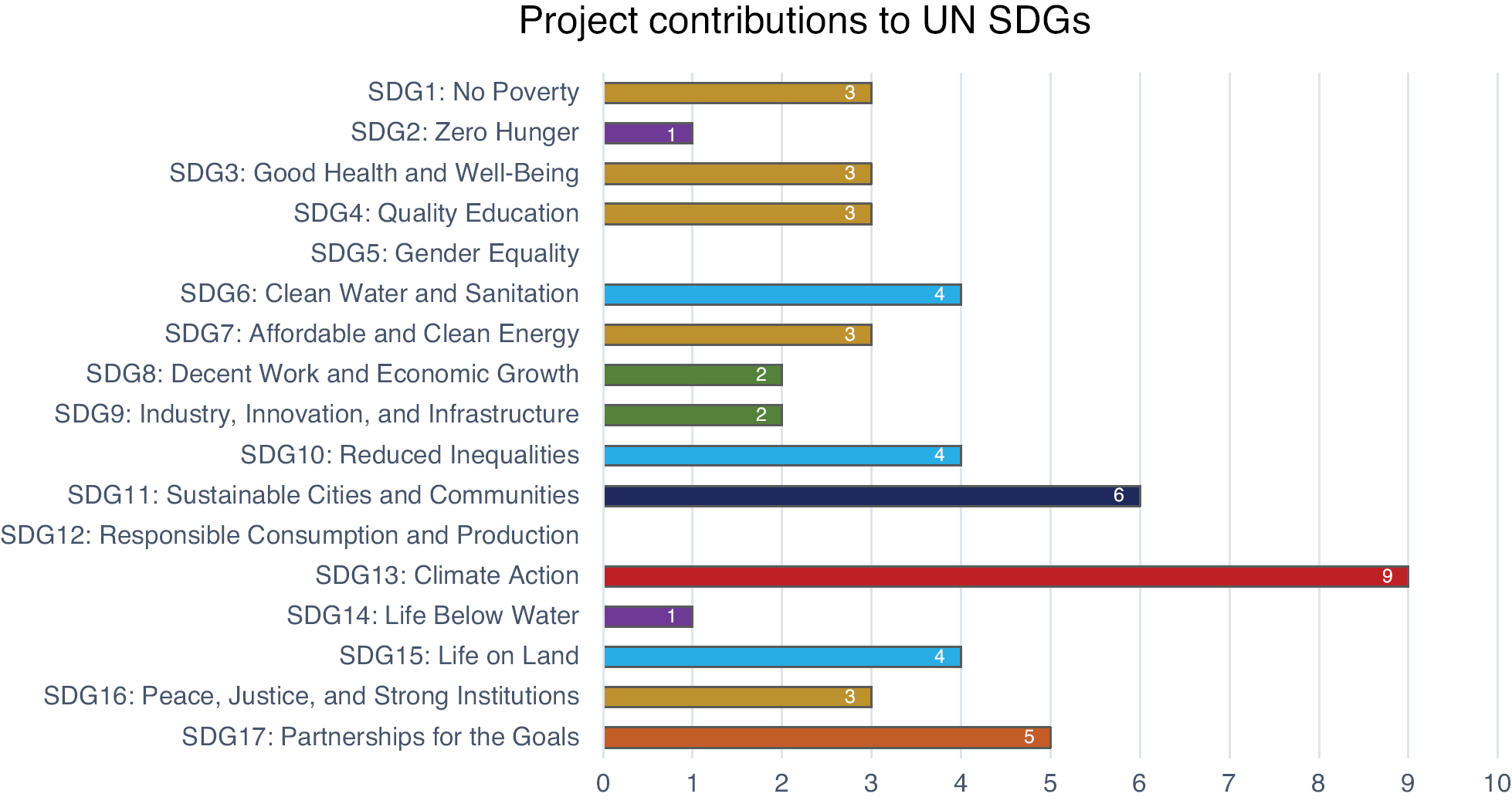

Collectively, the 12 projects in our pilot test addressed 15 of the 17 SDGs (Figure 2). Respondents could select and explain their contributions to as many SDGs as applied. The largest number of projects (9) contributed to SDG 13: Climate Action. Other common SDGs were SDG 11: Sustainable Cities and Communities (6 projects), SDG 6: Clean Water and Sanitation (4 projects) and SDG 10: Reduced Inequalities (4 projects).

Tangible impacts towards the SDGs

After counting the number of SDGs addressed by the 12 projects, we explored the types of impacts that projects had towards each SDG by cross-analysing the impact descriptors and the SDG societal goals. This analysis provided insight into the specific contributions of the institute towards the SDGs. We counted each impact reported by a project towards each of the SDGs associated with that project. For example, a project that reported instrumental, conceptual and capacity-building impacts, and a contribution to SDG 13: Climate Action, was counted as having three separate types of impact towards that goal.
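A minimal Python sketch of this cross-analysis follows, assuming, as the example in the text describes, that every impact category a project reported is counted once towards each SDG that project selected. The project records and the function names are hypothetical; summing each SDG column of the resulting counts gives totals of the kind shown in Table 2.

```python
from collections import Counter
from itertools import product

# Hypothetical project records: re-coded impact categories and selected SDGs.
projects = {
    "P01": {"categories": {"instrumental", "conceptual", "capacity building"}, "sdgs": {13}},
    "P02": {"categories": {"conceptual", "environmental"}, "sdgs": {11, 13}},
}

def cross_tabulate(projects):
    """Count (category, SDG) pairs, once per project, for every combination."""
    table = Counter()
    for record in projects.values():
        for category, sdg in product(record["categories"], record["sdgs"]):
            table[(category, sdg)] += 1
    return table

def totals_by_sdg(table):
    """Sum the counts for each SDG across all impact categories."""
    totals = Counter()
    for (_, sdg), n in table.items():
        totals[sdg] += n
    return totals

table = cross_tabulate(projects)
print(table[("conceptual", 13)])   # 2
print(totals_by_sdg(table)[13])    # 5  (3 from P01 + 2 from P02)
```

Because one project can feed several SDG columns, the column totals sum to more than the number of distinct impacts reported.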

SDG 13: Climate Action (24 impacts) and SDG 11: Sustainable Cities (17 impacts) had the most reported impacts overall. Of the 12 projects, 6 reported changes in plans, policies or practices for both of these SDGs; 7 projects made conceptual contributions to Climate Action by helping to change awareness and understanding; and 2 projects contributed to Climate Action via direct environmental benefits by improvements to ecosystem health. Projects also contributed to SDG 1: No Poverty, SDG 2: Zero Hunger and SDG 3: Good Health and Well-Being through direct social impacts by increasing food security and reducing energy costs for communities. Table 2 presents the impact categories reported by the 12 projects for each SDG.

Table 2. Societal impact descriptor categories for each UN Sustainable Development Goal (SDG), as reported by 12 pilot projects

| Impact descriptor | SDG 1 | SDG 2 | SDG 3 | SDG 4 | SDG 5 | SDG 6 | SDG 7 | SDG 8 | SDG 9 | SDG 10 | SDG 11 | SDG 12 | SDG 13 | SDG 14 | SDG 15 | SDG 16 | SDG 17 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Instrumental | 3 | 0 | 2 | 1 | 0 | 4 | 2 | 0 | 1 | 2 | 6 | 0 | 6 | 0 | 1 | 2 | 0 |
| Conceptual | 0 | 0 | 1 | 2 | 0 | 2 | 2 | 0 | 1 | 1 | 5 | 0 | 7 | 1 | 4 | 1 | 1 |
| Capacity building | 2 | 0 | 2 | 3 | 0 | 3 | 1 | 2 | 0 | 1 | 2 | 0 | 5 | 0 | 1 | 3 | 0 |
| Connectivity | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 1 | 2 | 4 |
| Environmental | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 2 | 0 | 1 | 0 | 0 |
| Social | 1 | 2 | 1 | 0 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 2 | 0 | 0 | 1 | 0 |
| Total | 6 | 2 | 6 | 8 | 0 | 10 | 6 | 2 | 3 | 5 | 17 | 0 | 24 | 1 | 8 | 9 | 5 |

One project that generated multiple impacts towards Sustainable Cities and Climate Action used aircraft- and satellite-based imaging to detect, pinpoint and quantify methane emissions from regions around the US. Researchers shared methane data from the project with partners at federal, state and facility levels, and many partners indicated that they subsequently took action to reduce methane emissions. In one case, an estimated 75,000 metric tonnes of methane emissions per year were eliminated in California as a result of this research. The emissions data: (1) increased awareness of emissions issues (conceptual impacts); (2) were used to make decisions and develop policies (instrumental impacts); and (3) led to reductions in methane emissions (environmental impacts).

Effectiveness of reporting tool

One goal of this pilot test was to design a robust and user-friendly reporting tool that would capture the range of societal impacts embedded in the framework. We found the framework to be robust, in that it captured diverse types of research impacts across different types of projects. The reporting tool, however, was not as user-friendly as intended, owing to a combination of participants’ novice understanding of research impacts evaluation and insufficient guidance in the online tool to bridge this knowledge gap. This mismatch between the tool and participants’ familiarity with the evaluation process contributed to inconsistencies in how they interpreted impact categories and evidence of impact.

As described in the methods section, representatives from 9 of the 12 projects sought some level of engagement with our evaluation team to complete the survey. Responses from project representatives who did not seek assistance did not reflect a clear understanding of the impact categories or of evidence of impact; for example, some reported impacts without evidence that change had occurred. These issues prompted us to re-analyse all responses as submitted.

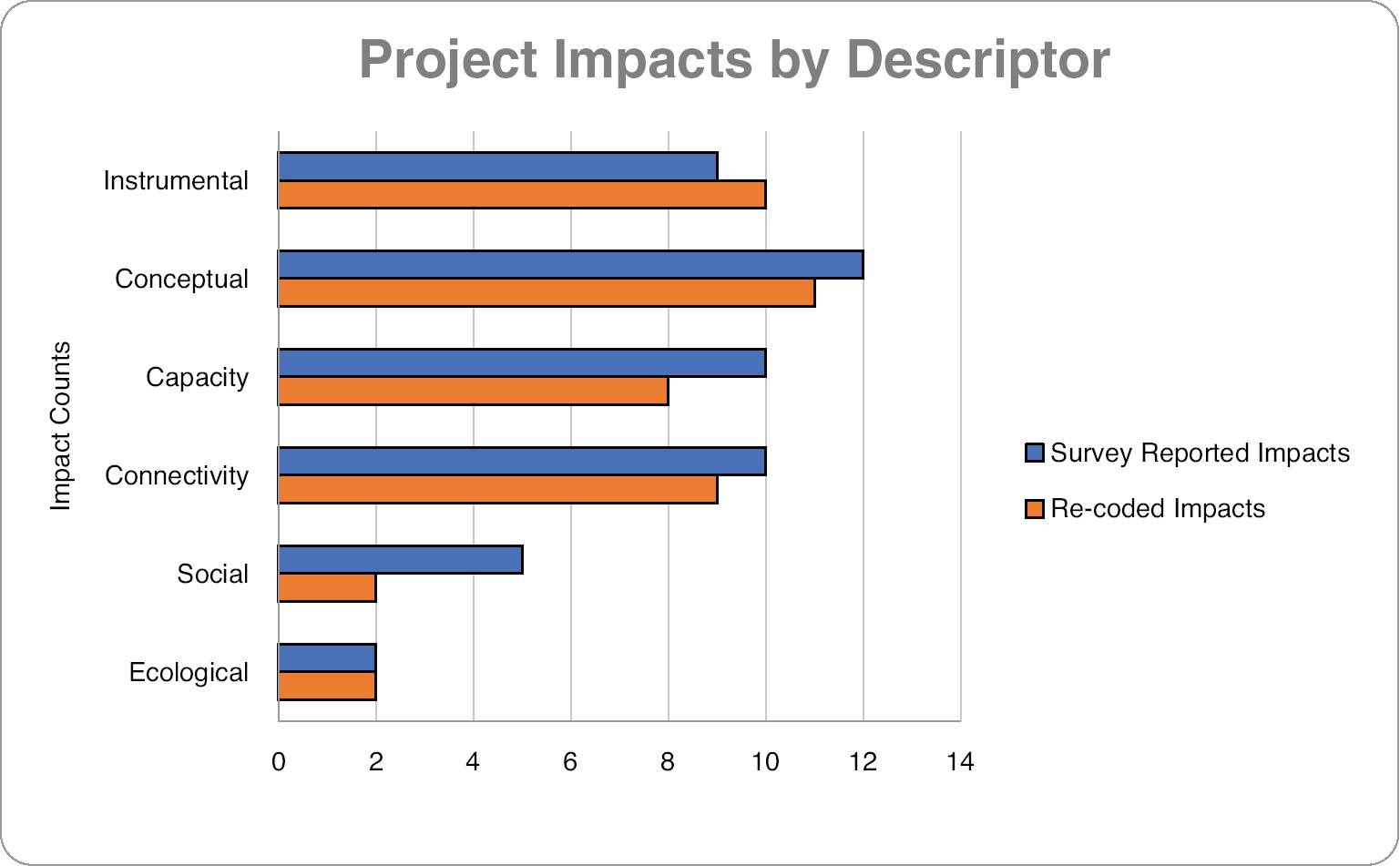

To assess the difference between self-reports and expert analysis, we used a consensus coding process (Creswell and Poth, 2016) to re-analyse each impact reported by project representatives and assign it an impact category. Our re-coded categories matched participants’ original categorisations about 56 per cent of the time. Figure 3 compares the original entries with the results of our re-coding. The data reported in this article reflect the re-analysed data.
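The article does not specify how the 56 per cent figure was calculated. One common approach, assumed here, is simple percent agreement: the share of originally reported impacts whose self-reported category matches the category assigned during re-coding. The paired codes below are hypothetical, and impacts added during re-analysis are not included because they have no self-reported category to compare.

```python
# Hypothetical paired codes for the same reported impacts:
# (category selected by participant, category assigned by consensus re-coding).
# None marks an item removed in re-coding (e.g. an output with no evidence of change).
pairs = [
    ("instrumental", "instrumental"),
    ("instrumental", "conceptual"),
    ("conceptual", "conceptual"),
    ("capacity building", None),
]

def percent_agreement(pairs):
    """Percentage of pairs where the self-reported and re-coded categories match."""
    if not pairs:
        raise ValueError("no paired codes to compare")
    matches = sum(1 for reported, recoded in pairs if reported == recoded)
    return 100 * matches / len(pairs)

print(percent_agreement(pairs))  # 50.0
```

Simple percent agreement does not correct for chance; a chance-corrected statistic such as Cohen's kappa would be an alternative if a stricter comparison were needed.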

Overall, the results of our re-analysed impact categories showed six fewer impacts than reported by survey participants. Some participants identified an output or activity as an impact with no evidence that change had occurred; we removed these from the impacts count. Other participants miscategorised impacts (for example, describing an impact as instrumental, when it fitted better as conceptual); we moved these impacts to their correct impact category. In 3 projects, participants described an impact elsewhere in their response, but did not report it in the impact section; we added these impacts to their appropriate category.

Feedback on the impacts framework

After completing the pilot test and sharing results with the institute’s leadership team through an internal report, we had several discussions with institute staff to explore pilot test findings and gather feedback on the framework. Feedback sessions involved five hour-long focus groups in early 2023, conducted virtually (the standard meeting format for the institute’s staff and faculty). Additional feedback was collected through 18 semi-structured interviews with directors and coordinators from the institute’s 13 programmes, as well as with the institute’s leadership team. Overall, institute leadership and staff supported developing a societal impact evaluation. The internal report resulting from the pilot study was shared as a positive example of research evaluation with other campus departments and programmes. Feedback also contained suggestions for improvements to the framework to make it more applicable to the institute.

In the first focus group session, participants reflected on how well the SDGs and the impact descriptor categories aligned with their research projects or programmes. Participants posted notes to an online whiteboard to indicate one of three responses (yes – well aligned; maybe; or no – not well aligned). They also shared thoughts orally or in written comments using the conferencing chat function. Overall, the SDGs strongly aligned with the societal goals of the institute’s programmes or projects. Each of the SDGs was identified by at least one programme as being relevant to their work. The most well-aligned were SDG 11: Sustainable Cities and Communities (11 yes, 2 maybe); SDG 13: Climate Action (11 yes, 2 maybe); SDG 7: Affordable and Clean Energy (8 yes, 6 maybe); SDG 15: Life on Land (8 yes, 3 maybe); SDG 4: Quality Education (7 yes, 3 maybe). Several participants noted that the title of the SDG was not enough to determine whether it captured their research activities, and they would need to explore the full breadth of the SDG before selecting it as applicable. Based on this feedback, the relevance of each SDG may be undercounted.

Of course, the SDGs are not the final word on societal goals. While the SDGs captured the global goals of most of the institute's programmes, participants' feedback reflected the need for additional sets of goals. Many participants suggested developing a set of institute-level goals and values to better define what the institute as a whole is working towards. These context-based, institute-level goals could then be mapped on to a larger goals framework, such as the SDGs. Other participants emphasised the role of societal partners in defining 'success' and setting goals, specifically the need to address partners' goals and expectations when evaluating project success (for example, Woolley and Molas-Gallart, 2023). A related issue is that impacts should be verified by external partners; the lack of direct input from partners outside the institute weakened the evaluation process. Creating opportunities to centre partners' goals reaffirms the project's purpose as addressing societal needs.

All programme representatives said that the descriptor categories were a good or moderately good fit for their programmes. Additional comments reflected our previous findings about the need for a category to capture the impacts to higher education or culture changes within academia. We shared the definition of the attitude change impact from Edwards and Meagher (2020), which seemed to capture the dynamic that participants described.

Participants also asked about inclusion of economic impacts. We suggest that economic impacts can be included under the heading of social impacts, because they imply a financial benefit to individuals, communities or society as a whole. We also note that metrics for evaluating economic benefits of research or individual projects are well-established and can be used within this framework – or may be evaluated as stand-alone impacts, as is often the case now (Association of Public and Land-Grant Universities, 2019; Pedersen et al., 2020).

As discussed above, we analysed how projects made progress towards the SDGs by combining the societal impact goals with the impact descriptor categories. Feedback from institute leadership and staff favoured this combination of analyses as a useful way to understand tangible progress at the project and institute scale. We found that combining the two frameworks also added rigour to the evaluation process, in that researchers or programmes could not just tick a box to say they are contributing to societal goals. Through this process, participants had to provide evidence of how their work contributed through the impact descriptor categories. Describing how research contributes to impact encourages reflection and learning about mobilising knowledge into action.
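The combination described above can be pictured as a two-way table. One axis holds the societal goals (the 'what'), the other holds the impact descriptor categories (the 'how'), and each cell lists the projects with evidenced impacts at that intersection. The Python sketch below illustrates this structure under stated assumptions. The `Impact` record, its fields and the example data are all hypothetical. The rule that impacts without evidence are skipped is our reading of the 'no tick-box' requirement, not a published specification. Cells count projects rather than impacts, matching the 'Number of projects' column in the impact category table.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Impact:
    """Hypothetical record of one reported impact."""
    project: str
    sdgs: tuple      # SDG numbers the impact contributes to (the 'what')
    category: str    # impact descriptor category (the 'how')
    evidence: str    # description of the observed change


def sdg_by_category(impacts):
    """Map (SDG, category) -> set of projects with evidenced impacts.

    Impacts with an empty evidence field are skipped, so a project cannot
    claim a contribution to an SDG simply by ticking a box.
    """
    table = defaultdict(set)
    for impact in impacts:
        if not impact.evidence.strip():
            continue
        for sdg in impact.sdgs:
            table[(sdg, impact.category)].add(impact.project)
    return table


# Hypothetical records for illustration only; not drawn from the study.
impacts = [
    Impact("A", (7, 13), "instrumental", "Utility adopted a lower-emission portfolio"),
    Impact("B", (13,), "conceptual", "Utility staff report new understanding of streamflow"),
    Impact("C", (11,), "connectivity", ""),  # no evidence given, so excluded
]

for (sdg, category), projects in sorted(sdg_by_category(impacts).items()):
    print(f"SDG {sdg:>2} | {category:<12} | {len(projects)} project(s)")
```

Storing sets of project names, not bare counts, keeps each cell traceable back to the narrative evidence behind it. That traceability matters because, as participants pointed out, the number of impacts says little about their significance.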

Another theme that emerged from feedback sessions involved questions about whether and how projects and programmes would be compared to one another. In quantifying the 'what' and 'how' in our analyses, we risk emphasising the number of impacts over their significance. A project with nine impacts does not necessarily reflect more success than a project with three impacts. Those three impacts might mean more to societal partners or reflect a larger systemic change than any of the nine from the other project. While impact numbers can be useful in documenting and communicating impact, maintaining a qualitative, narrative description that conveys the context and significance of the project and its impacts is crucial to the mission of strengthening engaged research practices and supporting positive changes in partnership with communities and other decision makers. This feedback reflects earlier comments about incorporating external partners' definitions of success into assessment processes.

Discussion and conclusions

Impactful research is happening throughout universities, even at those without centralised impacts assessments. Developing systematic ways to capture the process, depth and breadth of these impacts helps demonstrate to researchers, funders and societal stakeholders the ways that academia can contribute to positive societal outcomes. It provides avenues for those doing engaged work to receive greater recognition than is common in standard academic reward systems. The absence of societal impacts within current promotion, tenure and performance review processes hinders this work and penalises academics who invest in engaged scholarship (for example, Ozer et al., 2023). Even before it is formalised in reward and recognition structures, increasing impact literacy can help engaged scholars better describe and document their own work. Assessing impacts improves the practice of engaged scholarship by embedding reflective practice and evaluative mindsets into academic research, thus increasing positive impacts over time.

Several lessons from this pilot project have implications for the use of societal impacts assessments. We found that this proposed framework aligned well with the inter- and transdisciplinary research occurring within the institute. The impact descriptor categories effectively captured most of the types of impacts generated by the 12 pilot projects. The category that emerged from the data – impacts to higher education – could potentially be captured in future assessment using the ‘attitude and culture change impact’ described in Edwards and Meagher (2020). Similarly, the SDGs reflected the impact goals of most of the 12 projects reviewed here, although use of the SDGs requires familiarity with the goals and subgoals to be most effective. Using the SDGs to provide shared language and international-level goals makes the results of research impact evaluation relatable to a wider audience. But the SDGs are not the only worthy societal goals. Different organisations may develop their own sets of goals or impact priorities that provide more clarity and focus. Organisations should also aim to incorporate input and priorities from specific societal partners when developing their societal impact goals.

While the framework was largely successful in capturing the impacts evidence we sought, based on extensive feedback from the institute’s staff, we identified several weaknesses in our data collection strategy that have implications for instituting an impacts reporting process. First, despite our efforts, the online survey format did not provide sufficient support or explanation of the concepts to allow participants to use it effectively without guidance. Particularly in contexts where impacts evaluation is new, developing researchers’ knowledge and skills to identify, describe and document societal impacts requires practice and more emphasis on impacts literacy. In this study, we observed that participants struggled with differentiating outputs from impacts, and with demonstrating impacts using appropriate evidence. If researchers do not understand impacts or have the tools to assess them, it is impossible to capture accurate data (Bayley and Phipps, 2019a, 2019b). In future tests of the framework, we plan to use semi-structured interviews as the primary form of data collection to ensure more complete and robust responses. Another approach to embedding societal impacts concepts into research is to use this framework as a planning tool that researchers and programmes can use as they craft funding proposals and develop project plans; efforts to test this planning approach are also underway. Over time, as researchers gain more exposure to research impact evaluation, we anticipate an increase in impact literacy, which will improve the data collection process.

Second, we did not include our participants’ societal partners in this pilot project. We consider that gap a weakness, and we will seek to address it in future assessments. We asked participants to provide evidence of impact, but this leaves the narrative power in the hands of researchers. Inclusion of the experiences of societal partners – in their own words – would add richness to impact narratives and reveal additional impacts. Sometimes it may reveal examples where projects have not been as successful as anticipated, which can provide opportunities for reflection and recalibration of project goals and methods.

One way that we attempted to enrich impact assessment in our pilot study was by combining two types of assessment frameworks – linking the societal goals to which the research contributed (the 'what') and descriptors of changes and impacts that emerged directly from the research effort (the 'how'). By linking the 'how' framework to the 'what' framework, researchers can contextualise their work as part of larger societal movements and explain how their work contributes to solving societal challenges. But the quantitative overview should not stand alone. The qualitative evidence of 'how' and 'what' makes this analysis more meaningful, helps researchers, partners and funders understand the significance of the impacts, and guards against unhelpful project-to-project comparisons.

This study rests on the assumption that the academic research enterprise has a wealth of knowledge and skills that, in partnership with the knowledge and skills of societal partners, can help solve our most pressing social and environmental challenges. Whether we conceptualise the relationship between research and society as a contract between science and society (Lubchenco and Rapley, 2020) or simply as a shared responsibility to other humans and our environment, strengthening partnerships and societal contributions can be much more central to the research enterprise. Because people often work towards measurable goals, defining and documenting progress towards societal impacts plays an important role in closing the gaps between research and society. Embedding the concepts and language of societal impacts into the research enterprise moves us towards elucidating research impacts, increasing impact literacy and strengthening the practices of engaged scholarship and impactful research. This pilot evaluation framework and impacts tool provide one more step along that path.

Acknowledgements

The authors wish to thank the participants in this pilot project. The projects represented here are included due to the generosity of the researchers and project staff who shared their time, insights and reflections with us. We would also like to thank two anonymous reviewers who provided thoughtful, constructive and helpful feedback on this article.

Declarations and conflicts of interest

Research ethics statement

The authors declare that research ethics approval for this article was waived by the University of Arizona Institutional Review Board.

Consent for publication statement

The authors declare that research participants’ informed consent to publication of findings – including photos, videos and any personal or identifiable information – was secured prior to publication. This study was classified as programme evaluation/quality improvement, and therefore did not require oversight from the Institutional Review Board. However, we assured participants that their responses would be de-identified and would remain anonymous in publications.

Conflicts of interest statement

The authors declare no conflicts of interest with this work. All efforts to sufficiently anonymise the authors during peer review of this article have been made. The authors declare no further conflicts with this article.

References

Adam, P; Ovseiko, PV; Grant, J; Graham, KE; Boukhris, OF; Dowd, A-M; Balling, GV; Christensen, RN; Pollitt, A; Taylor, M. (2018). ISRIA statement: Ten-point guidelines for an effective process of research impact assessment. Health Research Policy and Systems 16 (1) : 8. DOI: http://dx.doi.org/10.1186/s12961-018-0281-5

Advisory Committee for Environmental Research and Education. Engaged Research for Environmental Grand Challenges: Accelerating discovery and innovation for societal impacts. A report of the NSF Advisory Committee for Environmental Research and Education. National Science Foundation. Accessed 21 February 2023 https://nsf-gov-resources.nsf.gov/2022-12/Engaged-research-for-environmental-grand-challenges-508c.pdf?VersionId=QwBICw1M0eQa6rawrjW7H4OmzWZhuImR.

Alvesson, M; Gabriel, Y; Paulsen, R. (2017). Return to Meaning: A social science with something to say. Oxford: Oxford University Press.

Andrews, K. (2022). Why I wrote an impact CV. Nature, February 1 2022 DOI: http://dx.doi.org/10.1038/d41586-022-00300-6

Arnott, JC; Kirchhoff, CJ; Meyer, RM; Meadow, AM; Bednarek, AT. (2020). Sponsoring actionable science: What public science funders can do to advance sustainability and the social contract for science. Current Opinion in Environmental Sustainability 42 : 38. DOI: http://dx.doi.org/10.1016/j.cosust.2020.01.006

Association of Public and Land-Grant Universities. (2019). Public Impact Research: Engaged universities making the difference. Washington, DC: Association of Public and Land-Grant Universities. Accessed 21 February 2023 https://www.aplu.org/our-work/2-fostering-research-innovation/public-impact-research/.

Bayley, J; Phipps, D. (2019a). Building the concept of research impact literacy. Evidence & Policy: A Journal of Research, Debate and Practice 15 (4) : 597. DOI: http://dx.doi.org/10.1332/174426417X15034894876108

Bayley, J; Phipps, D. (2019b). Extending the concept of research impact literacy: Levels of literacy, institutional role and ethical considerations. Emerald Open Research 1 (3) : 14. DOI: http://dx.doi.org/10.1108/EOR-03-2023-0005

Beard, TA; Donaldson, SI; Unger, JB; Allem, J-P. (2023). Examining tobacco-related social media research in government policy documents: Systematic review. Nicotine & Tobacco Research 26 (4) : 421. DOI: http://dx.doi.org/10.1093/ntr/ntad172

Beyond the Academy. (2022). Guidebook for the Engaged University: Best practices for reforming systems of reward, fostering engaged leadership, and promoting action-oriented scholarship. Keeler, BL, Locke, CC (eds.). Accessed 21 February 2023 http://beyondtheacademynetwork.org/guidebook/.

Bornmann, L; Haunschild, R; Marx, W. (2016). Policy documents as sources for measuring societal impact: How often is climate change research mentioned in policy-related documents? Scientometrics 109 (3) : 1477. DOI: http://dx.doi.org/10.1007/s11192-016-2115-y

Brink, C. (2018). The Soul of a University: Why excellence is not enough. Bristol: Policy Press.

Britton, J; Johnson, H. (2023). Community autonomy and place-based environmental research: Recognizing and reducing risks. Metropolitan Universities 34 (2) DOI: http://dx.doi.org/10.18060/26440

Chankseliani, M; McCowan, T. (2021). Higher education and the Sustainable Development Goals. Higher Education 81 (1) : 1. DOI: http://dx.doi.org/10.1007/s10734-020-00652-w

Chubb, J; Reed, MS. (2018). The politics of research impact: Academic perceptions of the implications for research funding, motivation and quality. British Politics 13 : 295. DOI: http://dx.doi.org/10.1057/s41293-018-0077-9

Creswell, JW; Poth, CN. (2016). Qualitative Inquiry and Research Design: Choosing among five approaches. Thousand Oaks, CA: Sage.

Cvitanovic, C; Howden, M; Colvin, R; Norström, A; Meadow, AM; Addison, P. (2019). Maximising the benefits of participatory climate adaptation research by understanding and managing the associated challenges and risks. Environmental Science & Policy 94 : 20. DOI: http://dx.doi.org/10.1016/j.envsci.2018.12.028

De Jong, S; Balaban, C; Holm, J; Spaapen, J. (2020). Redesigning research evaluation practices for the social sciences and humanities. Deeds and Days 73 : 17. DOI: http://dx.doi.org/10.7220/2335-8769.73.1

Drame, ER; Martell, ST; Mueller, J; Oxford, R; Wisneski, DB; Xu, Y. (2011). Engaged scholarship in the academy: Reflections from the margins. Equity & Excellence in Education 44 (4) : 551. DOI: http://dx.doi.org/10.1080/10665684.2011.614874

Edwards, DM; Meagher, LR. (2020). A framework to evaluate the impacts of research on policy and practice: A forestry pilot study. Forest Policy and Economics 114 101975 DOI: http://dx.doi.org/10.1016/j.forpol.2019.101975

Fazey, I; Bunse, L; Msika, J; Pinke, M; Preedy, K; Evely, AC; Lambert, E; Hastings, E; Morris, S; Reed, MS. (2014). Evaluating knowledge exchange in interdisciplinary and multi-stakeholder research. Global Environmental Change 25 : 204. DOI: http://dx.doi.org/10.1016/j.gloenvcha.2013.12.012

Foster, KM. (2010). Taking a stand: Community-engaged scholarship on the tenure track. Journal of Community Engagement and Scholarship 3 (2) : 20. DOI: http://dx.doi.org/10.54656/GTHV1244

Greenhalgh, T; Raftery, J; Hanney, S; Glover, M. (2016). Research impact: A narrative review. BMC Medicine 14 (1) : 78. DOI: http://dx.doi.org/10.1186/s12916-016-0620-8

Greenwood, DJ; Levin, M. (2007). Introduction to Action Research. 2nd ed. Thousand Oaks, CA: Sage.

Gunn, A; Mintrom, M. (2017). Evaluating the non-academic impact of academic research: Design considerations. Journal of Higher Education Policy and Management 39 (1) : 20. DOI: http://dx.doi.org/10.1080/1360080X.2016.1254429

Hourneaux Junior, F. (2021). Editorial: The research impact in management through the UN’s sustainable development goals. RAUSP Management Journal 56 (2) : 150. DOI: http://dx.doi.org/10.1108/RAUSP-04-2021-252

Hudson, CG; Knight, E; Close, SL; Landrum, JP; Bednarek, A; Shouse, B. (2023). Telling stories to understand research impact: Narratives from the Lenfest Ocean Program. ICES Journal of Marine Science 80 (2) : 394. DOI: http://dx.doi.org/10.1093/icesjms/fsac169

Jagannathan, K; Arnott, JC; Wyborn, CA; Klenk, N; Mach, KJ; Moss, RH; Sjostrom, KD. (2020). Great expectations? Reconciling the aspiration, outcome, and possibility of co-production. Current Opinion in Environmental Sustainability 42 : 22. DOI: http://dx.doi.org/10.1016/j.cosust.2019.11.010

Jasanoff, S; Wynne, B. (1998). Science and decisionmaking In: Rayner, S, Malone, EL (eds.), Human Choice and Climate Change. Vol. 1 Columbus: Battelle Press, pp. 1.

Jensen, EA; Reed, M; Jensen, AM; Gerber, A. (2021). Evidence-based research impact praxis: Integrating scholarship and practice to ensure research benefits society. Open Research Europe 1 : 137. DOI: http://dx.doi.org/10.12688/openreseurope.14205.2

Louder, E; Wyborn, C; Cvitanovic, C; Bednarek, AT. (2021). A synthesis of the frameworks available to guide evaluations of research impact at the interface of environmental science, policy and practice. Environmental Science & Policy 116 : 258. DOI: http://dx.doi.org/10.1016/j.envsci.2020.12.006

Lubchenco, J; Rapley, C. (2020). Our moment of truth: The social contract realized? Environmental Research Letters 15 (11) 110201 DOI: http://dx.doi.org/10.1088/1748-9326/abba9c

Mach, KJ; Lemos, MC; Meadow, AM; Wyborn, C; Klenk, N; Arnott, JC; Ardoin, NM; Fieseler, C; Moss, RH; Nichols, L; Stults, M; Vaughan, C; Wong-Parodi, G. (2020). Actionable knowledge and the art of engagement. Current Opinion in Environmental Sustainability 42 : 30. DOI: http://dx.doi.org/10.1016/j.cosust.2020.01.002

Meadow, AM; Owen, G. (2021). Planning and Evaluating the Societal Impacts of Climate Change Research Projects: A guidebook for natural and physical scientists looking to make a difference. Tucson: University of Arizona. DOI: http://dx.doi.org/10.2458/10150.658313

Meagher, LR; Martin, U. (2017). Slightly dirty maths: The richly textured mechanisms of impact. Research Evaluation 26 (1) : 15. DOI: http://dx.doi.org/10.1093/reseval/rvw024

Meagher, L; Lyall, C; Nutley, S. (2008). Flows of knowledge, expertise and influence: A method for assessing policy and practice impacts from social science research. Research Evaluation 17 (3) : 163. DOI: http://dx.doi.org/10.3152/095820208X331720

Modern Language Association of America. Guidelines for Evaluating Publicly Engaged Humanities Scholarship in Language and Literature Programs. New York: Modern Language Association of America. Accessed 21 February 2024 https://www.mla.org/content/download/187094/file/Guidelines-Evaluating-Public-Humanities.pdf.

Morton, S. (2015). Progressing research impact assessment: A “contributions” approach. Research Evaluation 24 (4) : 405. DOI: http://dx.doi.org/10.1093/reseval/rvv016

Muhonen, R; Benneworth, P; Olmos-Peñuela, J. (2020). From productive interactions to impact pathways: Understanding the key dimensions in developing SSH research societal impact. Research Evaluation 29 (1) : 34. DOI: http://dx.doi.org/10.1093/reseval/rvz003

Oliver, K; Innvar, S; Lorenc, T; Woodman, J; Thomas, J. (2014). A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Services Research 14 (1) : 2. DOI: http://dx.doi.org/10.1186/1472-6963-14-2

Owen, G. (2020). What makes climate change adaptation effective? A systematic review of the literature. Global Environmental Change 62 102071 DOI: http://dx.doi.org/10.1016/j.gloenvcha.2020.102071

Owen, G. (2021). Evaluating socially engaged climate research: Scientists’ visions of a climate resilient US Southwest. Research Evaluation 30 (1) : 26. DOI: http://dx.doi.org/10.1093/reseval/rvaa028

Ozer, EJ; Renick, J; Jentleson, B; Maharramli, B. (2023). Scan of Promising Efforts to Broaden Faculty Reward Systems to Support Societally-Impactful Research. Washington, DC: Pew Charitable Trusts: Transforming Evidence Funders Network. Accessed 21 February 2024 https://www.pewtrusts.org/en/research-and-analysis/white-papers/2023/10/universities-take-promising-steps-to-reward-research-that-benefits-society.

Pedersen, DB; Grønvad, JF; Hvidtfeldt, R. (2020). Methods for mapping the impact of social sciences and humanities – A literature review. Research Evaluation 29 (1) : 4. DOI: http://dx.doi.org/10.1093/reseval/rvz033

Pelz, DC. (1978). Some expanded perspectives on use of social science in public policy In: Yinger, J, Cutler, SS (eds.), Major Social Issues: A multidisciplinary view. New York: The Free Press, pp. 346.

Penfield, T; Baker, MJ; Scoble, R; Wykes, MC. (2014). Assessment, evaluations, and definitions of research impact: A review. Research Evaluation 23 (1) : 21. DOI: http://dx.doi.org/10.1093/reseval/rvt021

Rickards, L; Steele, W; Kokshagina, O; Moraes, O. (2020). Research Impact as Ethos. Melbourne: RMIT University.

Sianes, A; Vega-Muñoz, A; Tirado-Valencia, P; Ariza-Montes, A. (2022). Impact of the Sustainable Development Goals on the academic research agenda: A scientometric analysis. PLoS ONE 17 (3) e0265409 DOI: http://dx.doi.org/10.1371/journal.pone.0265409

Smit, JP; Hessels, LK. (2021). The production of scientific and societal value in research evaluation: A review of societal impact assessment methods. Research Evaluation 30 (3) : 323. DOI: http://dx.doi.org/10.1093/reseval/rvab002

Spaapen, J; Van Drooge, L. (2011). Introducing “productive interactions” in social impact assessment. Research Evaluation 20 (3) : 211. DOI: http://dx.doi.org/10.3152/095820211X12941371876742

Spaapen, J; Van Drooge, L; Propp, T; Van der Meulen, B; Shinn, T; Marcovich, A; Van den Besselaar, P; de Jong, S; Barker, K; Cox, D; Morrison, K; Sveinsdottir, T; Pearson, D; D'Ippolito, B; Prins, A; Molas-Gallart, J; Tang, P; Castro-Martínez, E. (2011). SIAMPI Final Report, Accessed 21 February 2024 http://www.siampi.eu/Content/SIAMPI_Final%20report.pdf.

Stern, N. (2016). Building on Success and Learning from Experience: An independent review of the Research Excellence Framework, Accessed 21 February 2024 https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/541338/ind-16-9-ref-stern-review.pdf.

Tseng, V; Bednarek, A; Faccer, K. (2022). How can funders promote the use of research? Three converging views on relational research. Humanities and Social Sciences Communications 9 (1) : 1. DOI: http://dx.doi.org/10.1057/s41599-022-01157-w

VanderMolen, K; Meadow, AM; Horangic, A; Wall, TU. (2020). Typologizing stakeholder information use to better understand the impacts of collaborative climate science. Environmental Management 65 : 178. DOI: http://dx.doi.org/10.1007/s00267-019-01237-9

Wall, T; Meadow, A; Horangic, A. (2017). Developing evaluation indicators to improve the process of coproducing usable climate science. Weather, Climate, and Society 9 (1) : 95. DOI: http://dx.doi.org/10.1175/WCAS-D-16-0008.1

Watermeyer, R; Chubb, J. (2019). Evaluating “impact” in the UK’s Research Excellence Framework (REF): Liminality, looseness and new modalities of scholarly distinction. Studies in Higher Education 44 (9) : 1554. DOI: http://dx.doi.org/10.1080/03075079.2018.1455082

Weiss, C. (1973). The politics of impact measurement. Policy Studies Journal 1 (3) : 179. DOI: http://dx.doi.org/10.1111/j.1541-0072.1973.tb00095.x

Willson, RB. (2022). Identifying and leveraging collegial and institutional supports for impact In: Kelly, W (ed.), The Impactful Academic: Building a research career that makes a difference. Bingley: Emerald, pp. 13.

Woolley, R; Molas-Gallart, J. (2023). Research impact seen from the user side. Research Evaluation 32 (3) : 591. DOI: http://dx.doi.org/10.1093/reseval/rvad027

Impact category definitions, number of projects that demonstrated each category, and an example from the study

| Impact category | Definition | Number of projects | Example |
|---|---|---|---|
| Instrumental | Use of research to inform decisions, plans or policies | 10 | In their annual planning document, an electric utility company indicated their preference for an energy portfolio to reduce emissions the fastest for lowest cumulative total. This preference, based on research findings, was a departure from previous selections. |
| Higher education (instrumental) | Use of research to inform decisions, plans or policies within higher education | 1 | An output of this project was the production of culturally appropriate curriculum materials, which were then incorporated directly into courses. |
| Connectivity | New or strengthened relationships, partnerships or networks | 9 | This project strengthened connections with a federal government agency. Workshops also connected people within the agency who were not yet working together, particularly employees who worked in different regions of the US. |
| Conceptual | Changes in knowledge about, or awareness of, an issue | 11 | A utility company wanted to better understand the influence of temperature and precipitation on local river streamflow. Research provided new insight about the impact that heat, and cool-season and monsoon precipitation, have on these river systems. |
| Capacity building | Changes in skills, expertise or resources of project partners | 8 | Sixty community college students strengthened skills in applying traditional knowledge to develop culturally appropriate solutions for environmental and social challenges. These efforts reinforced their confidence to pursue four-year degrees and seek work in Tribal agencies. |
| Higher education (capacity building) | Increasing the capacity within higher education to undertake engaged and impactful research | 1 | Through its successful collaborations with Indigenous communities, this project contributed to the hiring of new Indigenous faculty members. |
| Social | Improved social outcomes for partners or broader communities | 2 | A project collaboration with an Indigenous health department addressed uranium and coal mining impacts. In the area, 61 per cent of farmers said they stopped farming due to a mine spill. Those who had resumed farming cited attending a project teach-in as a primary reason. |
| Environmental | Improved environmental conditions | 2 | Research and outreach led to efforts to eradicate buffelgrass in several locations around Pima County, AZ. These efforts helped to slow the spread of this invasive species and reduce the fire risk it brings. |