Introduction

A report by the Organisation for Economic Co-operation and Development (OECD, 2013: 469–70) has recommended greater use of peer learning among schools to develop capacity for self-evaluation and improvement, particularly in systems where schools have a high degree of autonomy, in order to prevent them from forming an introspective and defensive culture. School peer reviews are evaluations carried out by school leaders or other school practitioners, of whole schools, parts of schools (such as departments, curriculum areas or year groups) or aspects of practice, for example, mastery learning, feedback and assessment, use of technology, or helping students with special educational needs. Schools collaborate with other schools in networks (permanent organizational structures), partnerships (usually local collaborations around specific areas) and clusters (small groups for the purpose of conducting mutual reviews, sometimes subsets of networks or partnerships) to collect and analyse data in review visits. Visiting review teams provide feedback to the school (initially verbally) and often a report is produced for internal use, to summarize the findings and to give recommendations for improvement.

School peer reviews can provide feedback, critical friendship, validation of the school’s self-evaluation or support for fellow schools’ improvement efforts. Some peer review programmes are structured, facilitated and accredited by external agencies for a subscription fee, such as Challenge Partners or the Schools Partnership Programme in England; others are run by districts, states or local authorities, such as Queensland and New South Wales in Australia; and others are devised by school networks themselves (some multi-academy trusts in England do this: these are formal organizations with a central trust that coordinates a group of academies, that is, publicly funded independent schools). The peer review activity can be supported and structured within a school improvement programme and can sit within an evaluation cycle of a network of schools. External evaluation criteria may be used in the conduct of reviews, or the peer review may use its own unique framework. However, there is usually no obligation on schools to publish reports from peer reviews, as their purpose is to help schools in their improvement efforts, rather than to serve as an outward-facing accountability measure.

Peer reviews can empower school leaders and teaching professionals, giving them a voice that has diminished in many countries through de-professionalization and standardization (Sahlberg, 2011). Some authors have suggested that they can strengthen moral, professional and lateral accountability in a landscape often dominated by centralized, top-down and market-oriented accountability (Gilbert, 2012). Peer review programmes are also said to link powerful professional development of school leaders directly to the school improvement process (Matthews and Headon, 2015).

The rise of peer learning in some countries has also gone hand in hand with an ontology of research-to-practice that views teachers as technicians, divorced from the work of academics in the production of empirical evidence and the exploration of educational theory. This, accompanied by high-stakes accountability, means that such forms of collaborative peer work can become little more than a form of self-policing (Greany, 2020). Therefore, the article argues for the use of the nomenclature collaborative peer enquiry (CPE) as a way of opening up thinking about how to use more empowering processes that provide opportunities for school leaders and teachers to transform their practices and curricula together.

This article begins by outlining some broad traditions of collaborative action research. Using the typology devised by Foreman-Peck and Murray (2008), it examines the extent to which distinct peer review programmes exhibit forms of practical philosophy, professional learning or critical social science. Using this framework and the work of other scholars, it then explores the potential for these programmes to display authentic features of CPE.

Background

The development of peer review programmes can be seen in the context of a perceived need to rebalance the ‘drivers of change’ towards lateral and professional accountability and away from top-down forms of policy implementation that are perceived to have failed (Munby and Fullan, 2016). In their think piece, Munby and Fullan (ibid.) argue that what is described as autonomy for schools is often no more than the power to choose how to operate more efficiently, rather than the power to set their own criteria for what is considered high-quality education. Referring to the school improvement landscape in England, and in some other OECD countries, Barber (2004) suggested a shift from informed prescription in the 1990s to informed professional judgement in the 2000s. He suggested that in a maturing system, the locus of responsibility would shift more on to teachers and school leaders to drive improvement. While not specifically promoting peer review, he said that this period would need to include sharper, more intelligent forms of accountability.

Some systems, such as in England, Wales and Australia, are beginning to embed peer reviews to the extent that they have become a routine part of the internal evaluation process for many schools. Peer reviews have been operating for a longer period in the further education sector, including a programme of trans-European reviews using sector-wide protocols and standards (Gutknecht-Gmeiner, 2013). The growth of peer review in England has also led the National Association of Head Teachers to conduct an exercise with industry experts to set out standards for effective school-to-school peer review (NAHT, 2019).

In England, Challenge Partners was a pioneering organization, emerging from the London Challenge programme of 2002–11. This includes a collaborative model in which ‘stronger’ schools support ‘weaker’ ones within pre-existing networks, such as Teaching Schools Alliances (TSAs). In a TSA, a specially designated teaching school, based loosely on the teaching hospitals model and with a role in teacher training and school improvement, is the hub of a loose network of schools. This model of collaboration has been shown to be effective in improving student attainment (Chapman and Muijs, 2014). Other big networks have subsequently formed in England, notably the Schools Partnership Programme (SPP), through which approximately 1,300 schools have been engaged since its birth in 2014 (Education Development Trust, 2020). Survey data from an ongoing evaluation by the Education Endowment Foundation (EEF) of the SPP (Anders et al., forthcoming, 2022) show a trend towards growth in uptake of peer review in schools in England in the last decade. Of the 339 primary schools surveyed in June 2018, a third of the sample (111 schools) said that they had been involved in a peer review programme other than SPP over the two years prior to the survey.

Despite often being referred to as ‘peer review’ programmes, Challenge Partners and the SPP are, in fact, multifaceted school improvement programmes, with a number of strands of activity. In the case of the SPP, help was enlisted from distinguished scholars such as Michael Fullan and Viviane Robinson to develop their model (for example, Ettinger et al., 2020). This programme exhibits many of the eight features of effective networks outlined by Rincón-Gallardo and Fullan (2016), particularly the development of strong relationships of trust and internal accountability; continuous improvement through cycles of collaborative enquiry; and schools connecting outwards to learn from others.

What is action research?

Before analysing more closely how peer review models ‘fit’ more calibrated definitions of action research, a broad definition of action research can be taken as:

a form of practitioner research that is carried out by professionals into a practice problem that they themselves are in some way responsible for. Unlike other forms of practitioner research, which involve studying a situation in retrospect, action research involves a process part of which is carried out simultaneously with taking action with the intention of improving a situation. (Foreman-Peck and Murray, 2008: 146)

While there is some debate within the field about what constitutes ‘proper’ action research, and this will be discussed further below, a core feature is the use of a cyclical model involving stages of action and reflection on this action. Carr and Kemmis (1986), for instance, propose cycles of planning, action, observation, reflection, leading to a new cycle of action. Action research can involve a wide range of methods, including classroom observation, questionnaires, research logs and interviews (Foreman-Peck and Murray, 2008: 146). This lack of alignment to particular methods leads the same authors to state that action research is not a methodology, something that is strongly disputed by others (for example, Somekh, 2005).

If we look at peer review, it stands up well to the above general definition: reviews are usually cyclical, they evaluate actions in process, and they involve a focus of enquiry and a commitment to taking action in response to the learning taking place from the review. Peer reviews use multiple forms of enquiry and data collection, including classroom observation, analysis of policy documents and students’ work, and interviews with staff, students and other stakeholders. They can also include analysis of quantitative data, such as student progress and achievement.

Action research is a practice in itself, a ‘meta-practice’, that is, a practice-based practice (Kemmis, 2009). Specifically, it involves changing practices and people’s understanding of these practices, as well as the conditions under which they practise (ibid.: 464). By drawing on Habermas’s theory of knowledge-constitutive interests – that social life is structured by language, work and power (ibid.) – Kemmis identifies three kinds of action research: ones guided by an interest in changing outcomes (technical action); ones guided by an interest in illuminating a problem of practice; and ones guided by the desire to emancipate people from injustice (ibid.: 469). Put in similar terms, Foreman-Peck and Murray (2008) define three major approaches to action research, which this article uses to analyse four peer review programmes: (1) action research as a form of professional learning; (2) action research as a practical philosophy; and (3) action research as a critical social science.

Type 1: Action research as a form of professional learning

In action research as a form of professional learning, the focus of research is on trialling solutions to practical problems, collecting data, analysing and evaluating. An example of this is the Best Practice Research Scholarship (BPRS) programme for ‘teacher-scholars’, evaluated by Furlong et al. (2014). The reports produced by the teachers were often seen as accounts of highly effective professional learning, rather than the publication of findings that could be generalized to other contexts. Furlong and colleagues’ (ibid.) evaluation stated that this research was an example of Mode 2 knowledge production (Gibbons et al., 1994), that is, it was context- and time-based, with a problem-solving orientation. Unlike traditional academic (Mode 1) knowledge, these reports tended not to be written up in a formal manner suitable for publication in academic journals; instead, the knowledge generated from enquiries lived in the minds of those taking part in the research and in their approaches to practice.

Type 2: Action research as a practical philosophy

Action research as a practical philosophy is aimed at researching practice in collaboration with others, with the aim of aligning it more acceptably with educational values and theories. Through self-evaluation, and reflection on evidence and educational aims, curriculum initiatives are developed, with the aim of realizing the educational goals and principles of the school community. The consideration of participants’ underlying educational values is as important as the evaluation of the relative success of particular interventions.

Type 3: Action research as a critical social science

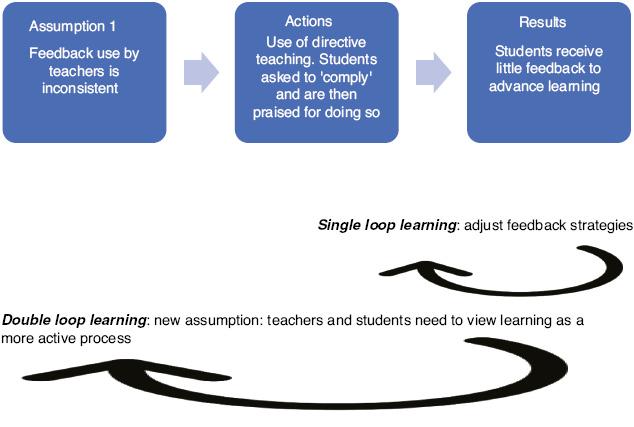

In action research as a critical social science, critical theorizing has an emancipatory intent – to consciously identify power imbalances and surface them in order to reveal barriers to social change. All participants are researchers, and knowledge is seen to develop through the construction and reconstruction of theory and practice by those involved. This form of action research is less narrowly focused on local problem-solving, since it is viewed that an instrumentalist approach may perpetuate problems by failing to acknowledge their root causes, offering a ‘sticking plaster’ solution. Related to this is the concept of ‘double loop learning’ (Argyris, 1977). Unlike single loop learning, where new actions are refinements of previous strategies and based on the same fundamental understanding, in double loop learning, a new, revised (and deeper) conception of the problem is developed; successive cycles involve the transformation of the individuals involved through the generation of new knowledge and understanding.

A role for collaborative peer enquiry in the research-engaged school ecosystem

One of the perennial challenges of school improvement efforts has been to mobilize knowledge from evidence so that practices and policies can be modified accordingly and more effectively to achieve desired educational ends.

Broadly speaking, the kind of knowledge that can be brought to enhance practice is either school-based data or formal research conducted by academics or practitioners. School-based data is concerned with evidence about student outcomes at national, district and school level that can be broken down by gender, race, socio-economic status, students with additional learning needs, or those starting with non-native level in the first language of instruction and so on. The ability to analyse this deluge of data requires a high degree of evaluation literacy and analytical skill. Furthermore, many issues are ‘wicked problems’ (Rittel and Webber, 1974), that is, ones for which there is no shared idea for what the problem consists of or what a solution might look like. This means they require the consultation and engagement of a wide range of stakeholders and extensive evidence gathering. However, high-stakes accountability can suppress the type of enquiry and transparency of working needed to resolve such complex problems as reducing attainment gaps between richer and poorer students or tackling school exclusions. Instead, schools are motivated to emulate competitor schools that have achieved acclaim through good inspection results. Such isomorphism leads to less rather than more innovation, and it is often accompanied by game playing and a defensive desire to ‘prove’ they are at the level needed to be judged well in external evaluations (Ehren, 2019). Peer review models that more closely resemble approaches used for external accountability may compound these issues instead of providing an alternative.

The second kind of knowledge that can be used in school improvement comes from formal educational research. Bringing this into the ambit of schools has long been the subject of policy- and academic-level discussion about how to make teaching a more evidence-based or evidence-informed profession (for example, Hargreaves, 2007). The advantages of bringing formal research closer to school practices and policies are multiple and have been widely discussed elsewhere (for example, Brown, 2013; Godfrey, 2016; Nutley et al., 2008; Stoll, 2015). These include the identification of teaching and leadership strategies more likely to lead to gains in student achievement; the understanding of the ‘science’ of implementation of such strategies; the illumination of problems so that they can be better understood (and thus better addressed); the creation of a shared professional and academically underpinned language of practice; and breaking down – or breaking open – complex issues through the application of theoretical lenses derived from sociology, cognitive science, philosophy and economics (to name a few).

The introduction of freely accessible evidence syntheses in recent years, notably in the USA, New Zealand and England, has given an additional source for practitioners on which to base their decision making. Innovative resources, such as the Education Endowment Foundation’s teaching and learning toolkit (EEF, 2020), have equipped practitioners with a summary of international evidence about strategies that best enhance student attainment. The Campbell Collaboration in the USA (Campbell Collaboration, 2020) and the Iterative Best Evidence Synthesis in New Zealand (Education Counts, 2020) offer similar functions. However, in these two countries, the ‘research to practice’ narrative is dominated by a narrow ‘what works’ agenda, which focuses on the identification of best strategies for raising attainment but ignores many of the other advantages of illumination and application of theory mentioned above. The what works model tends to divide the research and teaching communities of practice (Farley-Ripple et al., 2018) and disempowers practitioners through prescriptive ‘evidence-based’ policymaking (Godfrey, 2017). In this professional technical rationalism (Schön, 2001), teachers and school leaders eschew their critical or active role in the development or interpretation of the evidence base.

What is proposed here, then, is the encouragement of a more active role for practitioners in ‘research-informed practice’, that is:

An actively enquiring mode of professionalism that involves critical reflection and engagement in (‘doing’) and with (‘using’) academic and practitioner forms of research, taking into account both the findings and theories generated from them. (Godfrey, 2017: 438)

While most peer review programmes focus extensively on the collection and analysis of school-based data, it is in the incorporation of academic knowledge that most are still lacking. They therefore do not fully promote the cultures for the kind of research-engaged schools that can contribute to an effective school ecosystem (Godfrey and Handscomb, 2019) (see Table 1). Research-engaged schools promote active engagement in and with research and base decisions on a wide array of knowledge and evidence; staff are connected with colleagues in other schools and institutions, including ones engaged in the production and dissemination of research.

Table 1: Key characteristics of research-engaged schools

| | Features of research-engaged schools (Handscomb and MacBeath, 2003; Sharp et al., 2005; Wilkins, 2011) | Dimensions |
|---|---|---|
| 1) | Promotes practitioner research among its staff | Research-informed professional practice |
| 2) | Encourages its staff to access, read, use and engage critically with published research | |
| 3) | Uses research to inform its decision making at every level | The school as a learning organization |
| 4) | Welcomes being the subject of research by outside organizations | Connectivity to the wider system |
| 5) | Has ‘an outward-looking orientation’ | |

Source: adapted from Godfrey and Handscomb (2019: 9)

It is within the above conception of a research-engaged school system that this article proposes collaborative peer enquiry (CPE) as a potentially powerful solution, defined as:

A programme of mutual or reciprocal school review visits, agreed by a group of school leaders and involving a range of professionals and/or other stakeholders in developing or using their own evaluation focus and criteria, and who are committed to transforming practice through the collection of school-based evidence, in a process informed by both practitioner and academic knowledge.

Analysis of peer review programmes according to the major approaches to action research

This section applies the action research typology of Foreman-Peck and Murray (2008) – as a form of professional learning (called here type 1), as a practical philosophy (type 2) or as a critical social science (type 3) – to four models that can be broadly described as ‘peer review programmes’. The first two fall only partially within the above definition of CPE, while the last two are clearer examples of collaborative enquiry. Details about these models have been derived primarily from existing publications (especially Matthews and Headon, 2015; Godfrey and Brown, 2019b), as well as from chapters in an edited collection on peer review (Godfrey, 2020).

Example 1: Challenge Partners quality assurance programme

Like many peer review approaches, this involves phases of training for participants, scheduling of school visits, and analysis of pre-review data, followed by the school visit itself and then debriefing. The review visits take one-and-a-half days and include joint lesson observations and meetings with students and key staff. Visits are conducted by a review team of two to four senior leaders from other schools and are led by an inspector accredited by the Office for Standards in Education, Children’s Services and Skills (Ofsted, which is the national schools inspectorate in England). The host school can choose to identify an area of excellence to be validated by the review team. The reviewers base their judgements on their own professional experience and with reference to the Ofsted inspection criteria. Depending on the outcome of the visit, the school may be offered a leadership development day or other support service through a national brokering service. The report from the review is produced collaboratively by all involved. There is an expectation that the school will share the report both internally and externally. The reviewers receive feedback on their role, which they also share with their own school as part of their professional development. After the review, it is expected that the school will incorporate the findings within its school improvement action plan. The school shares the outcome with other schools in its local hub in order to seek local support to resolve its needs or to offer support to others where reviewers see outstanding practice (Berwick, 2020).

Aspects of the Challenge Partners model exemplify the type 1 approach to action research. An evaluation of Challenge Partners (Matthews and Headon, 2015) highlighted the high-quality professional/leadership development gained by participants. This came about through leading and participating in reviews, and also through the training and interchange of professional dialogue. Of head teachers of reviewed schools, 84 per cent felt that reviews had been very useful to the professional development of their senior leaders, and over 90 per cent of head teachers felt that they had helped in planning school improvement (ibid.). Challenge Partners also collects examples of excellent practice, which, once validated by peers, can be shared on its subject directory, thereby offering an appreciative enquiry approach (Stavros et al., 2015). The role of lead reviewers was also viewed as a form of critical friendship from external, trained and (to varying degrees) impartial or objective outsiders.

However, this model gives limited explicit latitude for ‘open’ enquiry due to its reference to the inspection criteria, and it tends not to involve trialling new ideas and evaluating them in the way that might be expected of the ‘professional learning’ category according to Foreman-Peck and Murray (2008). Where there is some wider learning gained by evaluating practice, this comes about to some extent as a (very useful) by-product of the review process and is not central to its focus.

Example 2: ‘Instead’ reviews run by the National Association of Head Teachers in 2015–16

In the context of high-stakes school inspections, ‘Instead’ reviews were designed by the National Association of Head Teachers (NAHT) to offer an alternative to Ofsted, and to strengthen the voice of head teachers (see Godfrey and Ehren, 2020). The Instead matrix consists of four focal areas: learning and teaching; pupils; community; and leadership. These are reviewed against the quality of schools’ vision and strategy, analysis, and delivery. Schools work in self-formed clusters of three or four, and review each other in turn in one school year. No grades are given in the review; rather, the framework and report address ‘actions needed and priorities’ and ‘what should be prioritized, developed, maximized and sustained?’ Lead reviewers from outside the cluster join the other head teachers for two-day review visits at each school. All lead reviewers have some prior experience of inspection or peer review.

Prior to each visit, the school to be reviewed completes the self-evaluation matrix, addressing ‘where they are at’ for each part of the grid, adding evidence and performance data that they send to the lead reviewer. They also decide on the focus of the visit. The report from each review is brought together by the lead reviewer in communication with the host school head teacher. The school has a right to reply after the reviewers have completed their report and given their findings. There is also a section in the report for the school to say what they have learned and to give their reactions to the review and how they felt about it.

In Instead reviews, features of type 2 action research as a practical philosophy were evident. First among these is the strong sense of moral commitment and purpose behind their collaboration. Interviews with participants in one case study cluster showed much discussion about shared educational values, particularly in trying to provide better schooling to children from vulnerable and disadvantaged backgrounds. The process of aligning practices with values was aided by the explicit focus on educational values and vision in the Instead review matrix. The lack of grading gave the review reports more of a narrative and qualitative focus, and the inclusion of reflections by the host school about the process gave a sense of mutual learning. Host schools were also able to engage in sometimes lengthy debate about the judgements arrived at by visiting teams about aspects of their school. This strong professional dialogue about the interpretation of aspects of the review data distinguishes it sharply from Ofsted inspections, in which only factual inaccuracies of their draft reports can be challenged by inspected schools.

However, while the NAHT model broadened discussion about educational values and ‘non-Ofsted’ criteria, one reviewer noted that head teachers sometimes reverted to inspection judgements based on their prior experience and training:

if I was an Ofsted inspector, the school would be at least ‘good’ with ‘outstanding’ features, and you are so far from ‘outstanding’, and these are the things you would need to do. So, they were talking in those terms. (Deputy head at Greenleigh Primary school (pseudonym), Godfrey and Ehren, 2020)

This internalization of Ofsted values, characteristic of the English education system, has been described elsewhere as the panoptic model of accountability, in which practitioners engage in surveillance on behalf of the system (Perryman et al., 2018). While, at times, head teachers found this policing role helpful – to put the host school senior leaders ‘on their mettle’ in preparation for future inspections – other comments by interviewees made it clear that in an open climate of discussion between peers, this was inappropriate and subversive of genuine professional dialogue. Thus, the claim for this as a type 2 form of action research is weakened, less by design than in its enactment.

Example 3: Research-informed Peer Review

Research-informed Peer Review (RiPR) has been developed at UCL Institute of Education by the author and exemplifies participatory evaluation (Cousins and Earl, 1992) in its collaboration between researchers from UCL and school leaders and teachers. The explicit combination of practitioner and academic knowledge to drive the peer visits also makes this (and the SILP model, below) a clear example of CPE.

Designed to be an experimental form of peer review, RiPR consciously deviates from other models that use whole school general frameworks by having a specific focus for improving teaching and learning. Schools work in clusters of three in mutual school visits and attend workshops conducted at UCL Institute of Education. Working on a shared enquiry theme (in this case, feedback and assessment policies and practices), teams of three or four participants from each school read and discuss published academic work on the topic. In the second workshop, participants co-develop research-based data-collection tools, such as tools for classroom observations that draw on research about effective feedback (for example, Hattie and Timperley, 2007).

Importantly, the model uses the principles of theory-engaged evaluation, in which the purpose of reviews is to make teachers’ theories of action visible (Robinson and Timperley, 2013). Using various learning approaches, including peer evaluations of school assessment policies and constructing a physical model to represent them, previously unstated values behind policies are made visible. In the review visits, data are collected on how teachers use feedback and their reasons behind this. Underpinning the approach is the recourse to the idea of policies as espoused theories of action (the ‘talk’) and practices as theories-in-use (the ‘walk’) (Robinson, 2018).

Through these learning experiences, in one primary school, their policies on feedback and assessment were revised. Having made visible the educational values behind their approaches, the review team shared these with their teaching staff, opening up the policy to debate and the joint construction of a new policy. The process of producing the revised policy was strongly indicative of type 2 action research; the new policy was more explicit about educational values and purposes, for instance, feedback was designed to:

- develop the self-regulation and independence of learners, taking ownership of their learning and making improvements
- communicate effectively with all learners to enable them to make improvements, ensuring all learners understand their feedback in the context of the wider learning journey
- ensure all feedback given is needs-driven and personalized
- ensure that feedback is only given when useful – constant feedback is less effective than targeted feedback
- raise self-esteem and motivate learners. (Extract from school feedback and assessment policy, adapted from Godfrey and Brown, 2019b: 103)

Once the new policy document was devised, teaching staff were able to adopt new approaches to assessment that were trialled and evaluated. The emergent feedback and assessment policies formed the focus for the review visit at this school. Reviewers were able to observe and discuss with teachers and pupils how they had understood and were enacting them in practice.

Example 4: Schools Inquiring and Learning from Peers (SILP)

In Chile, the author worked closely with the host team from the Catholic University of Valparaiso to implement a model that followed RiPR principles in nine publicly funded primary schools, three each in Valparaiso, Concepción and Santiago. While the process was facilitated, it was also conducted as a process of formal educational research (Cortez et al., 2020; Montecinos et al., 2020).

Compared to the previous RiPR experiences in England, in Chile, the reviews took on a more critical social science turn. Although, as in England, the initial focus was on feedback and assessment, this soon shifted to examining underlying issues that were historically embedded in the participating schools. Classroom observations showed that effective pupil feedback was often absent, due to the passive way that lessons positioned pupils in classes. Pupils were frequently referred to (by school leaders and teachers) as ‘vulnerable’, and some teachers worried that the kind of feedback that was aimed at challenging learners to progress further might not be received well. Interviews with both pupils and teachers showed a shared and somewhat impoverished view of learning, based on compliance – being on time, being quiet and doing the work.

This illumination of embedded practices was discussed in the review process between the university facilitators and the practitioners, and it was used to open up further discussion within the schools about the need to speak about learning differently. When interviewed at later professional learning sessions on site, some teachers said that to improve feedback they first needed to devise active lessons for students that gave opportunities for teacher–student and student–student dialogue (Montecinos et al., 2020). Similarly, school leaders confessed to insecurity about how to lead active professional learning of their teaching staff, so research teams from the university went into schools to assist head teachers to devise richer learning experiences. The initial characterization of the problem in terms of feedback use by teachers became recognized as a deeper underlying problem, that is, in terms of how practitioners and students viewed themselves as ‘learners’. This clearly exemplifies type 3 action research and, in particular, double loop learning (see Figure 1).

Conclusions and discussion

By applying the typology of action research found in Foreman-Peck and Murray (2008), this article identifies variations in how peer review programmes are oriented towards either circumscribed or more open forms of enquiry. A summary of the findings is shown in Table 2.

Table 2: Summary of analysis from four peer review programmes and their ‘fit’ to the action research typology found in Foreman-Peck and Murray (2008)

| Example of programme | Type 1 | Type 2 | Type 3 |
|---|---|---|---|
| Challenge Partners Quality Assurance programme | Strong professional learning, although evaluation and trialling of new approaches partly as a by-product | | |
| ‘Instead’ reviews run by NAHT in 2015–16 | | Discussion of values proposed by model, but not always enacted in the way intended | |
| RiPR | | Explicit, structured focus on aligning practice with educational values and theories | |
| SILP | | | Evidence of double loop learning as initial focus is redefined in relation to embedded practices |

The analysis above is designed to be illustrative, rather than to be a comprehensive analysis or comparison of each programme as a whole according to this typology; nor is each type necessarily mutually exclusive. Indeed, while the Challenge Partners model shows some signs of the ‘professional learning’ approach to action research, all the models described may to varying degrees evidence aspects of this type. The leadership development role of peer review is further discussed below, as this emerges as an especially salient finding in the research. Theoretical models also vary in implementation, influenced by how school leaders’ routines of practice have developed in response to the external accountability environment. The role of CPE in the accountability environment is also further examined below. The collaborative peer enquiry models above (RiPR and SILP) exhibit clear features of type 2 (practical philosophy) and type 3 (critical social science) forms of action research, which are less evident in the other models. These points have implications for everyday practice, in particular the extent to which school leaders and teachers have a voice in the problematization and co-creation of educational policy decisions and the development of school curricula. This may include a more activist stance, which at times may be ‘adversarial to existing government policies’ (Foreman-Peck and Murray, 2008: 151). Further challenges and potential in the use of CPE are discussed at the end of this section.

Leadership development

The description of peer review programmes by participants as ‘the best professional development I’ve ever done’ has been seen in evaluations of the Schools Partnership Programme (Anders et al., forthcoming, 2022), and this has been echoed in reports of other peer reviews in Australia, the Czech Republic and Chile (Godfrey, 2020). Indeed, a strong case can be made for peer review as a signature pedagogy for school leaders. Signature pedagogies have a surface structure of processes for teaching and learning about the profession (for example, demonstration, sharing practice); a deep structure that involves assumptions about the best ways to impart knowledge and the most appropriate forms of knowledge; and an implicit structure, particularly the beliefs, values and moral underpinnings of practice (Shulman, 2005: 55). The power of peer review is in the sharing, demonstrating and evaluation of practice, illustrative of the surface structure of a signature pedagogy. However, in CPE models, the deep structure is more evident through the combination of practitioner and academic knowledge relevant to professional practice. Furthermore, and as illustrated in the cases above, the implicit structure is seen through the discussions about beliefs, values and underpinnings of practice (type 2 action research). The difference with CPE models is that they allow greater latitude for transforming practice to align with these professional values, compared to peer review models where the use of external evaluation criteria means that these are already implicitly unarguable.

The debate here about external accountability-focused peer reviews versus CPE approaches to some extent mirrors the one about what is considered ‘proper action research’, in which concerns about narrow instrumental use are voiced, particularly from exponents of a critical social science approach (for example, Somekh, 2005; Kemmis, 2009). On the surface, many peer review programmes appear quite similar; most involve some form of training in how to conduct interviews and observations, to evaluate documentation and secondary data, and to write reports. These are all ‘research’ activities. But when the peer review process mimics an external inspection, is used as an interim assessment (between inspections) or is conducted in preparation for an anticipated inspection visit, the sense in which these can be described as a form of ‘enquiry’ is lost. And while these ‘mocksteds’ (rehearsals for Ofsted inspections) may still lead to professional learning, they do not promote an open process of enquiry, freely chosen and socially constructed by professional peers in dialogue.

The accountability dimension

In some countries, and notably in England, there has been a shift towards greater horizontal accountability, where school-to-school reviews exist in tandem with the vertical test-based and inspection accountability systems (Grayson, 2019: 25). Even in the enquiry-oriented models, there is still an accountability dimension. Although these do not always require formal reports, there are usually written records of some kind, and these can be circulated as widely as each school leader wishes, for instance, within their internal network, with governors, or even with parents or students, if deemed appropriate. In the case of the RiPR programme (see example 3 above), school leaders have also reported sharing the findings of their reviews with inspection teams as a way of providing the evidence that is underpinning strategies that they have introduced.

Schools are held accountable in a variety of ways, and the cases above provide examples of some of these. Earley and Weindling (2004) outline four accountability relationships in the school system:

- moral accountability (to students, parents, the community)
- professional accountability (to colleagues and others within the same profession)
- contractual accountability (to employers or the government)
- market accountability (to clients, to enable them to exercise choice).

Contractual and market forms of accountability tend to dominate in many school systems. The cases above, and others mentioned in a book edited by Godfrey (2020), provide further support for Gilbert (2012), who contends that peer reviews have the potential to increase professional and moral forms of accountability. The professional accountability dimension is particularly apparent, in which ‘teachers are accountable not so much to administrative authorities but primarily to their fellow teachers and school principals’ (Schleicher, 2018: 116). Many of the cases in the collection edited by Godfrey (2020) highlight how the moral dimension provides the overall rationale for school leaders’ decisions to work together with colleagues in peer review. However, in CPE models, this element may be stronger still, in that the ability to select or construct one’s own evaluation criteria means that participants’ values are built into the process a priori, and not just in the analysis of a predetermined external framework.

Collaborative peer enquiry: Challenges and potential

While the CPE model does not necessitate the involvement of academic staff, the unique form of ‘critical friendship’ from those with a different perspective can add considerable value to school improvement initiatives (Swaffield and MacBeath, 2005). Academic staff may be best positioned to provide an overall framework and process for the enquiry. In the RiPR model, for instance, UCL Institute of Education staff provided a review of the literature on effective feedback, ran the workshops that gave the overall rationale for the evaluations, and explained how the concept of theories of action would underpin data collection and analysis. It may be possible to use targeted school staff who have been through the process to lead future reviews, but it would not be feasible to undertake this approach without the facilitation and structure provided by academics to begin with. The academics were able to provide an outsider’s perspective to ‘force the local educator out of conventional roles and daily habits to assume new roles as inquiring investigators expected to raise probing questions that go beyond the taken-for-granted routines of school life’ (Sappington et al., 2010: 253). Additionally, a new language was introduced from academia and used among the school staff to talk about school evaluation, learning, feedback, theories of action and so on. On revisiting participating schools over a year later (both in England and in Chile), this new terminology was being used by school leaders to describe their reflections on the process and their ongoing progress in implementing the changes that they had introduced in their school policies and practices.

It is noteworthy that in the SILP model in Chile, the close involvement of academics in the implementation and analysis of reviews may have been a factor in steering the learning towards a more overtly critical social science approach. The team from the Catholic University of Valparaiso added post-review discussions, led by their academics, and they visited the schools later to help model and structure professional learning activities with teachers. Some additional research funding allowed them to justify devoting academics’ time to this. The payback was not only a series of publications, but also the creation of a test bed for a school improvement process that could be, and has been, scaled up and adapted to the Chilean context. Without the incentive structures for both academics and school teams, it is harder to see how CPE models could be sustained over a longer time period, and their scale-up may have been limited compared to other approaches.

There is untapped potential to extend the community of ‘peers’ in the CPE process to other stakeholders, such as district-level advisers, parental representatives or student bodies. It may be a radical step too far for some schools to train up parents in school review, but the closer and more active involvement of key stakeholders could democratize the education process in some potentially interesting ways. Giving an active role to students as reviewers/researchers working alongside teachers would add another interesting dimension, and would provide the kind of participation in education often missing when schools claim to be acting on ‘student voice’ (Godfrey, 2011). These are challenging ideas for future work in CPE models. However, the rewards will exceed the costs if they succeed in providing a process that empowers participants in achieving agreed and sustained educational improvements.

Acknowledgements

I would like to thank Dr Ruth McGinity for her insightful and challenging feedback on a draft of this article.

Notes on the contributor

David Godfrey is Associate Professor in Educational Leadership at UCL Institute of Education and programme leader for the MA in Educational Leadership. In July 2017 David was acknowledged in the Oxford Review of Education as one of the best new educational researchers in the UK. His latest publication, edited with Chris Brown, is An Ecosystem for Research-Engaged Schools: Reforming education through research (Routledge, 2019).

References

Anders, J; Godfrey, D; Stoll, L; Greany, T; McGinity, R; Munoz-Chereau, B. (forthcoming, 2022). Evaluation of the Schools Partnership Programme. Education Endowment Foundation.

Argyris, C. (1977). Double loop learning in organizations. Harvard Business Review 55 (5) : 115–25. Online. https://hbr.org/1977/09/double-loop-learning-in-organizations . accessed 28 August 2020

Barber, M. (2004). The virtue of accountability: System redesign, inspection and incentives in the era of informed professionalism. Journal of Education 185 (1) : 7–38, Online. DOI: http://dx.doi.org/10.1177/002205740518500102

Berwick, G. (2020). The development of a system model of peer review and school improvement: Challenge Partners In: Godfrey, D (ed.), School Peer Review for Educational Improvement and Accountability: Theory, practice and policy implications. Cham: Springer, pp. 159–80.

Brown, C. (2013). Making Evidence Matter: A new perspective for evidence-informed policy making in education. London: Institute of Education Press.

Campbell Collaboration. Better evidence for a better world, Online. https://campbellcollaboration.org/better-evidence.html . accessed 28 August 2020

Carr, W; Kemmis, S. (1986). Becoming Critical: Education, knowledge and action research. Basingstoke: Falmer Press.

Chapman, C; Muijs, D. (2014). Does school-to-school collaboration promote school improvement? A study of the impact of school federations on student outcomes. School Effectiveness and School Improvement 25 (3) : 351–93, Online. DOI: http://dx.doi.org/10.1080/09243453.2013.840319

Cortez, M; Campos, F; Montecinos, C; Rojas, J; Peña, M; Gajardo, J; Ulloa, J; Albornoz, C. (2020). Changing school leaders’ conversations about teaching and learning through a peer review process implemented in nine public schools in Chile In: Godfrey, D (ed.), School Peer Review for Educational Improvement and Accountability: Theory, practice and policy implications. Cham: Springer, pp. 245–66.

Cousins, J.B; Earl, L.M. (1992). The case for participatory evaluation. Educational Evaluation and Policy Analysis 14 (4) : 397–418, Online. DOI: http://dx.doi.org/10.3102/01623737014004397

Earley, P; Weindling, D. (2004). Understanding School Leadership. London: SAGE Publications.

Education Counts. BES (Iterative Best Evidence Synthesis), Online. www.educationcounts.govt.nz/publications/series/2515 . accessed 28 August 2020

Education Development Trust. The Schools Partnership Programme, Online. www.educationdevelopmenttrust.com/our-research-and-insights/case-studies/the-schools-partnership-programme . accessed 28 August 2020

EEF (Education Endowment Foundation). Teaching and learning toolkit, Online. https://educationendowmentfoundation.org.uk/evidence-summaries/teaching-learning-toolkit . accessed 28 August 2020

Ehren, M.C. (2019). Accountability structures that support school self-evaluation, enquiry and learning In: Godfrey, D; Brown, C (eds.), An Ecosystem for Research-Engaged Schools: Reforming education through research. London: Routledge, pp. 41–56.

Ettinger, A; Cronin, J; Farrar, M. (2020). Education Development Trust’s Schools Partnership Programme: A collaborative school improvement movement In: Godfrey, D (ed.), School Peer Review for Educational Improvement and Accountability: Theory, practice and policy implications. Cham: Springer, pp. 181–98.

Farley-Ripple, E; May, H; Karpyn, A; Tilley, K; McDonough, K. (2018). Rethinking connections between research and practice in education: A conceptual framework. Educational Researcher 47 (4) : 235–45, Online. DOI: http://dx.doi.org/10.3102/0013189X18761042

Foreman-Peck, L; Murray, J. (2008). Action research and policy. Journal of Philosophy of Education 42 (Supplement 1) : 145–63, Online. DOI: http://dx.doi.org/10.1111/j.1467-9752.2008.00630.x

Furlong, J; Menter, I; Munn, P; Whitty, G; Hallgarten, J; Johnson, N. (2014). Research and the Teaching Profession: Building the capacity for a self-improving education system: Final report of the BERA-RSA Inquiry into the role of research in teacher education. London: British Educational Research Association.

Gibbons, M; Limoges, C; Nowotny, H; Schwartzman, S; Scott, P; Trow, M. (1994). The New Production of Knowledge: The dynamics of science and research in contemporary societies. London: SAGE Publications.

Gilbert, C. (2012). Towards a Self-Improving System: The role of school accountability. Nottingham: National College for School Leadership. Online. https://dera.ioe.ac.uk/14919/1/towards-a-self-improving-system-school-accountability-thinkpiece%5B1%5D.pdf . accessed 28 August 2020

Godfrey, D. (2011). Enabling students’ right to participate in a large sixth form college: Different voices, mechanisms and agendas In: Czerniawski, G; Kidd, W (eds.), The Student Voice Handbook: Bridging the academic/practitioner divide. Bingley: Emerald Group Publishing, pp. 237–48.

Godfrey, D. (2016). Leadership of schools as research-led organisations in the English educational environment: Cultivating a research-engaged school culture. Educational Management Administration and Leadership 44 (2) : 301–21, Online. DOI: http://dx.doi.org/10.1177/1741143213508294

Godfrey, D. (2017). What is the proposed role of research evidence in England’s “self-improving” school system. Oxford Review of Education 43 (4) : 433–46, Online. DOI: http://dx.doi.org/10.1080/03054985.2017.1329718

Godfrey, D (ed.). (2020). School Peer Review for Educational Improvement and Accountability: Theory, practice and policy implications. Cham: Springer.

Godfrey, D; Brown, C (eds.). (2019a). An Ecosystem for Research-Engaged Schools: Reforming education through research. London: Routledge.

Godfrey, D; Brown, C. (2019b). Innovative models that bridge the research-practice divide: Research learning communities and research-informed peer review In: Godfrey, D; Brown, C (eds.), An Ecosystem for Research-Engaged Schools: Reforming education through research. London: Routledge, pp. 91–107.

Godfrey, D; Ehren, M. (2020). Case study of a cluster in the National Association of Headteachers’ “Instead” peer review in England In: Godfrey, D (ed.), School Peer Review for Educational Improvement and Accountability: Theory, practice and policy implications. Cham: Springer, pp. 95–115.

Godfrey, D; Handscomb, G. (2019). Evidence use, research-engaged schools and the concept of an ecosystem In: Godfrey, D; Brown, C (eds.), An Ecosystem for Research-Engaged Schools: Reforming education through research. London: Routledge, pp. 4–21.

Grayson, H. (2019). School accountability in England: A critique. Headteacher Update, January 10 2019 Online. www.headteacher-update.com/best-practice-article/school-accountability-in-england-a-critique/200743/ . accessed 11 September 2020

Greany, T. (2020). Self-policing or self-improving? Analysing peer reviews between schools in England through the lens of isomorphism In: Godfrey, D (ed.), School Peer Review for Educational Improvement and Accountability: Theory, practice and policy implications. Cham: Springer, pp. 71–94.

Gutknecht-Gmeiner, M. (2013). Peer review as external evaluation in vocational training and adult education: Definition, experiences and recommendations for use. Edukacja ustawiczna dorosłych 1 (80) : 84–91. Online. http://cejsh.icm.edu.pl/cejsh/element/bwmeta1.element.desklight-e9d3b2bf-d5a1-4f10-ba74-98087fe3df6c . accessed 28 August 2020

Handscomb, G; MacBeath, J. (2003). The Research Engaged School. Chelmsford: Essex County Council.

Hargreaves, D. (2007). Teaching as a research-based profession: Possibilities and prospects (The Teacher Training Agency Lecture 1996) In: Hammersley, M (ed.), Educational Research and Evidence-Based Practice. London: SAGE Publications, pp. 3–17.

Hattie, J; Timperley, H. (2007). The power of feedback. Review of Educational Research 77 (1) : 81–112, Online. DOI: http://dx.doi.org/10.3102/003465430298487

Kemmis, S. (2009). Action research as a practice-based practice. Educational Action Research 17 (3) : 463–74, Online. DOI: http://dx.doi.org/10.1080/09650790903093284

Matthews, A.P; Headon, M. (2015). Multiple Gains: An independent evaluation of Challenge Partners’ peer reviews of schools. London: Institute of Education Press.

Montecinos, C; Cortez Muñoz, M; Campos, F; Godfrey, D. (2020). Multivoicedness as a tool for expanding school leaders’ understandings and practices for school-based professional development. Professional Development in Education 46 (4) : 677–90, Online. DOI: http://dx.doi.org/10.1080/19415257.2020.1770841

Munby, S; Fullan, M. (2016). Inside-out and Downside-up: How leading from the middle has the power to transform education systems. London: Education Development Trust. Online. www.educationdevelopmenttrust.com/EducationDevelopmentTrust/files/51/51251173-e25d-4b34-80ae-033fcd7685ab.pdf . accessed 26 August 2020

NAHT (National Association of Head Teachers). The Principles of Effective School-to-School Peer Review. Haywards Heath: National Association of Head Teachers. Online. www.naht.org.uk/news-and-opinion/news/structures-inspection-and-accountability-news/the-principles-of-effective-school-to-school-peer-review/ . accessed 15 January 2020

Nutley, S; Jung, T; Walter, I. (2008). The many forms of research-informed practice: A framework for mapping diversity. Cambridge Journal of Education 38 (1) : 53–71, Online. DOI: http://dx.doi.org/10.1080/03057640801889980

OECD (Organisation for Economic Co-operation and Development). (2013). Synergies for Better Learning: An international perspective on evaluation and assessment. Paris: OECD Publishing, pp. 383–485, Online. DOI: http://dx.doi.org/10.1787/9789264190658-10-en

Perryman, J; Maguire, M; Braun, A; Ball, S. (2018). Surveillance, governmentality and moving the goalposts: The influence of Ofsted on the work of schools in a post-panoptic era. British Journal of Educational Studies 66 (2) : 145–63, Online. DOI: http://dx.doi.org/10.1080/00071005.2017.1372560

Rincón-Gallardo, S; Fullan, M. (2016). Essential features of effective networks in education. Journal of Professional Capital and Community 1 : 5–22, Online. DOI: http://dx.doi.org/10.1108/JPCC-09-2015-0007

Rittel, H.W; Webber, M.M. (1974). Wicked problems. Man-Made Futures 26 (1) : 272–80.

Robinson, V. (2018). Reduce Change to Increase Improvement. Thousand Oaks, CA: Corwin.

Robinson, V.M; Timperley, H. (2013). School improvement through theory engagement In: Lai, M; Kushner, S (eds.), A Developmental and Negotiated Approach to School Self-Evaluation. Bingley: Emerald Group Publishing, pp. 163–77.

Sahlberg, P. (2011). Finnish Lessons: What can the world learn from educational change in Finland. New York: Teachers College Press.

Sappington, N; Baker, P.J; Gardner, D; Pacha, J. (2010). A signature pedagogy for leadership education: Preparing principals through participatory action research. Planning and Changing 41 (3–4) : 249–73.

Schleicher, A. (2018). World Class: How to build a 21st-century school system. Paris: OECD Publishing.

Schön, D. (2001). From technical rationality to reflection-in-action In: Harrison, R; Reeve, F; Hanson, A; Clarke, J (eds.), Supporting Lifelong Learning. London: Routledge, pp. 50–71.

Sharp, C; Eames, A; Sanders, D; Tomlinson, K. (2005). Postcards from Research-Engaged Schools. Slough: National Foundation for Educational Research.

Shulman, L.S. (2005). Signature pedagogies in the professions. Daedalus 134 (3) : 52–9, Online. DOI: http://dx.doi.org/10.1162/0011526054622015

Somekh, B. (2005). Action Research: A methodology for change and development. Maidenhead: McGraw-Hill Education.

Stavros, J.M; Godwin, L.N; Cooperrider, D.L. (2015). Appreciative inquiry: Organization development and the strengths revolution In: Rothwell, W.J; Stavros, J; Sullivan, R.L (eds.), Practicing Organization Development: Leading transformation and change. Hoboken, NJ: Wiley, pp. 96–116.

Stoll, L. (2015). Using evidence, learning and the role of professional learning communities In: Brown, C (ed.), Leading the Use of Research and Evidence in Schools. London: Institute of Education Press.

Swaffield, S; MacBeath, J. (2005). School self-evaluation and the role of a critical friend. Cambridge Journal of Education 35 (2) : 239–52, Online. DOI: http://dx.doi.org/10.1080/03057640500147037

Wilkins, R. (2011). Research Engagement for School Development. London: Institute of Education.